September 2023 Releases

November's releases make it easier to scale transfer-heavy call flows, with new tools that align dialer pacing to downstream agent capacity and streamline complex handoffs. You’ll also see more accurate post-call workflows, with more reliable data extraction and disposition outcomes driven by configurable LLMs and earlier validation.

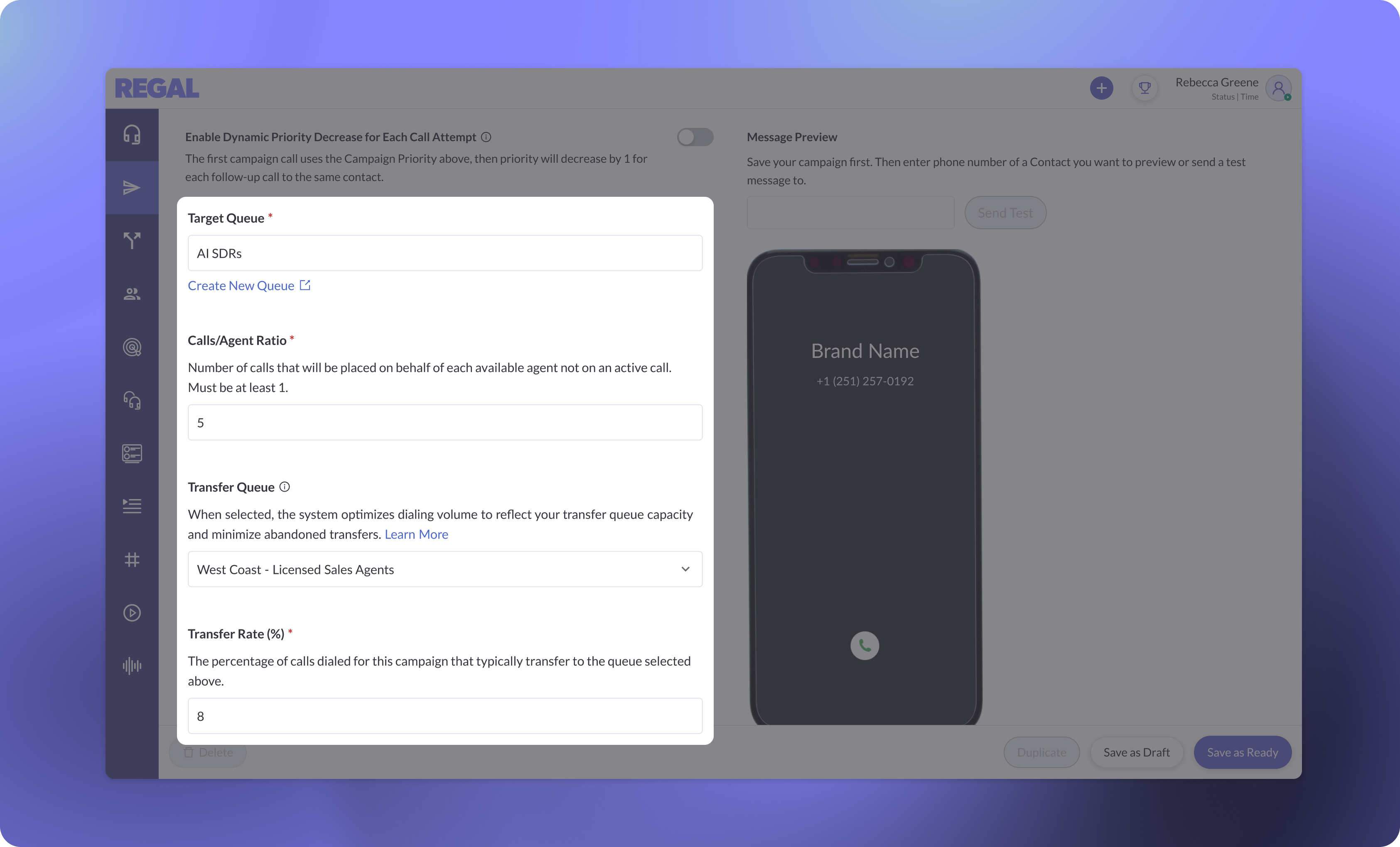

In transfer-heavy outbound campaigns, qualifying a lead is only half the job. If an AI agent with unlimited capacity is handling the qualification, the real bottleneck appears when the licensed or expert human agent receiving the transfer is unavailable. That downstream variability can disrupt the flow of otherwise successful calls, and pacing dials solely on the AI agent’s capacity can quickly overwhelm the transfer team, leading to long wait times, abandoned handoffs, and lost conversion opportunities. With Transfer-Aware Dialing, Regal dynamically adjusts your outbound dialing pace based on both target and transfer queue capacity, allowing you to maintain throughput without overwhelming downstream teams.

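Use Transfer-Aware Dialing to:

- Pace outbound dials to both AI agent and transfer queue capacity
- Reduce long wait times and abandoned handoffs on your transfer team
- Maintain throughput without overwhelming downstream teams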

For early access to Transfer-Aware Dialing, reach out to your Customer Success Manager.
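To make the pacing idea concrete, here is a minimal, hypothetical sketch of capacity-aware pacing in general, not Regal's actual algorithm. It assumes you know the AI agent's free call slots, the number of idle human agents in the transfer queue, and two estimated rates (how often a dial connects and how often a connected call qualifies for transfer). `PacingInputs` and `dials_to_place` are illustrative names only.

```python
import math
from dataclasses import dataclass


@dataclass
class PacingInputs:
    # All fields are hypothetical inputs for illustration.
    ai_capacity: int                # max concurrent calls the AI agent can handle
    ai_active_calls: int            # calls the AI agent is handling right now
    transfer_agents_available: int  # idle human agents in the transfer queue
    connect_rate: float             # fraction of dials that connect, 0 < x <= 1
    transfer_rate: float            # fraction of connected calls that get transferred


def dials_to_place(p: PacingInputs) -> int:
    """Return how many new dials to place now, capped by AI and transfer capacity."""
    if not 0 < p.connect_rate <= 1 or not 0 <= p.transfer_rate <= 1:
        raise ValueError("rates must be fractions in the allowed ranges")

    # Dials needed to fill the AI agent's free slots, given that only some connect.
    ai_headroom = max(p.ai_capacity - p.ai_active_calls, 0)
    ai_limit = math.floor(ai_headroom / p.connect_rate)
    if p.transfer_rate == 0:
        return ai_limit  # no transfers expected, so only the AI agent limits pace

    # Expected transfers per dial; keep expected transfers within idle agents.
    transfers_per_dial = p.connect_rate * p.transfer_rate
    transfer_limit = math.floor(p.transfer_agents_available / transfers_per_dial)

    # Whichever side runs out first sets the pace.
    return min(ai_limit, transfer_limit)
```

With 80 free AI slots, 3 idle transfer agents, and 50% connect and transfer rates, the AI side alone would allow 160 dials, but the transfer queue caps the pace at 12 (12 dials × 0.25 expected transfers per dial = 3 transfers). Rounding down keeps the sketch conservative, so it under-dials rather than overwhelms the transfer team.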

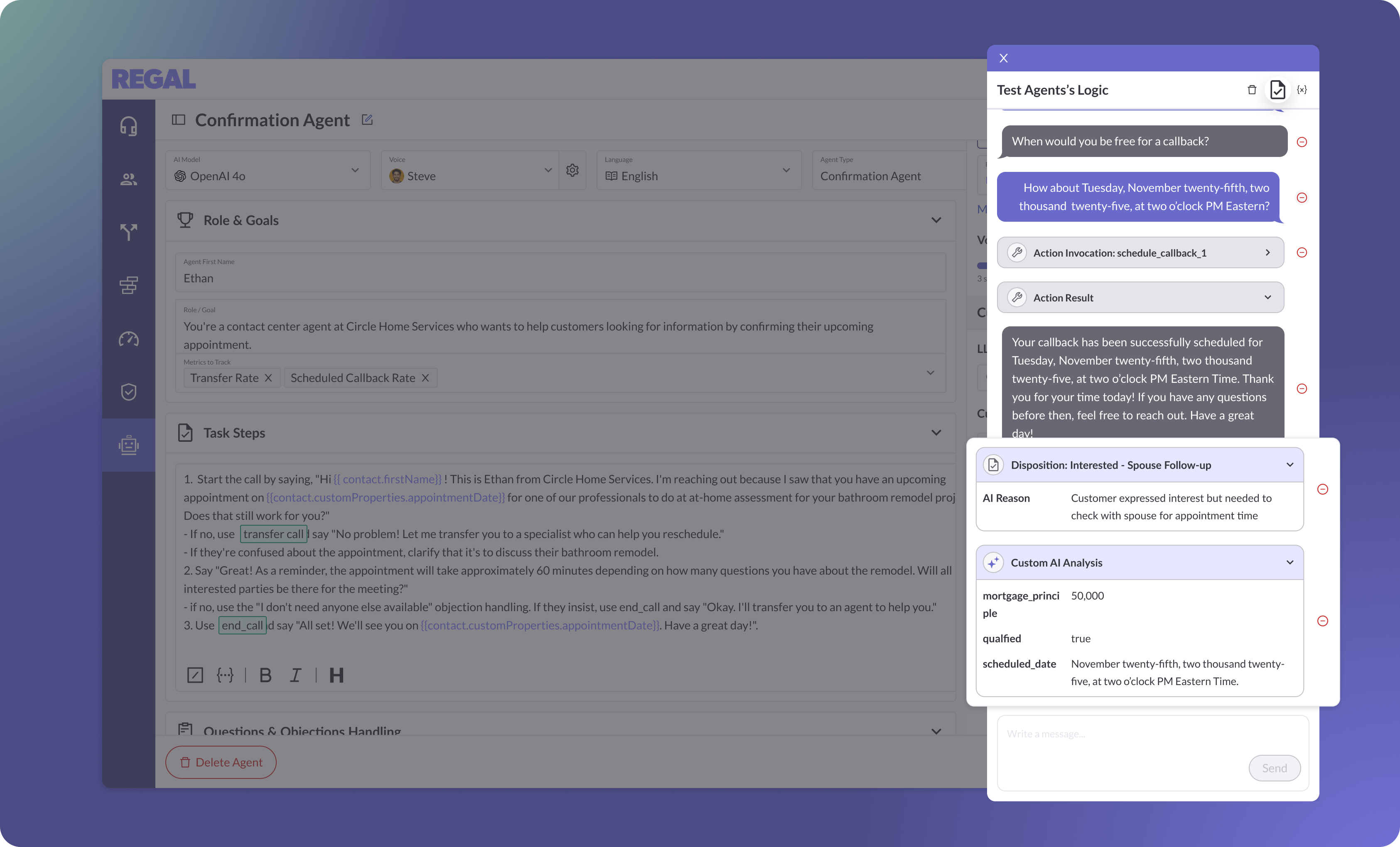

Whether you’re testing your agent for the first time or adjusting it after an update, Observability now brings Custom AI Analysis data points and disposition outcomes directly into Test Logic for true end-to-end validation. If a data point isn’t captured or a disposition doesn’t resolve as expected, you can update the corresponding description and immediately verify the corrected result. With conversational behavior and post-call workflows in one place, you can test more comprehensively, iterate faster, and launch with greater confidence.

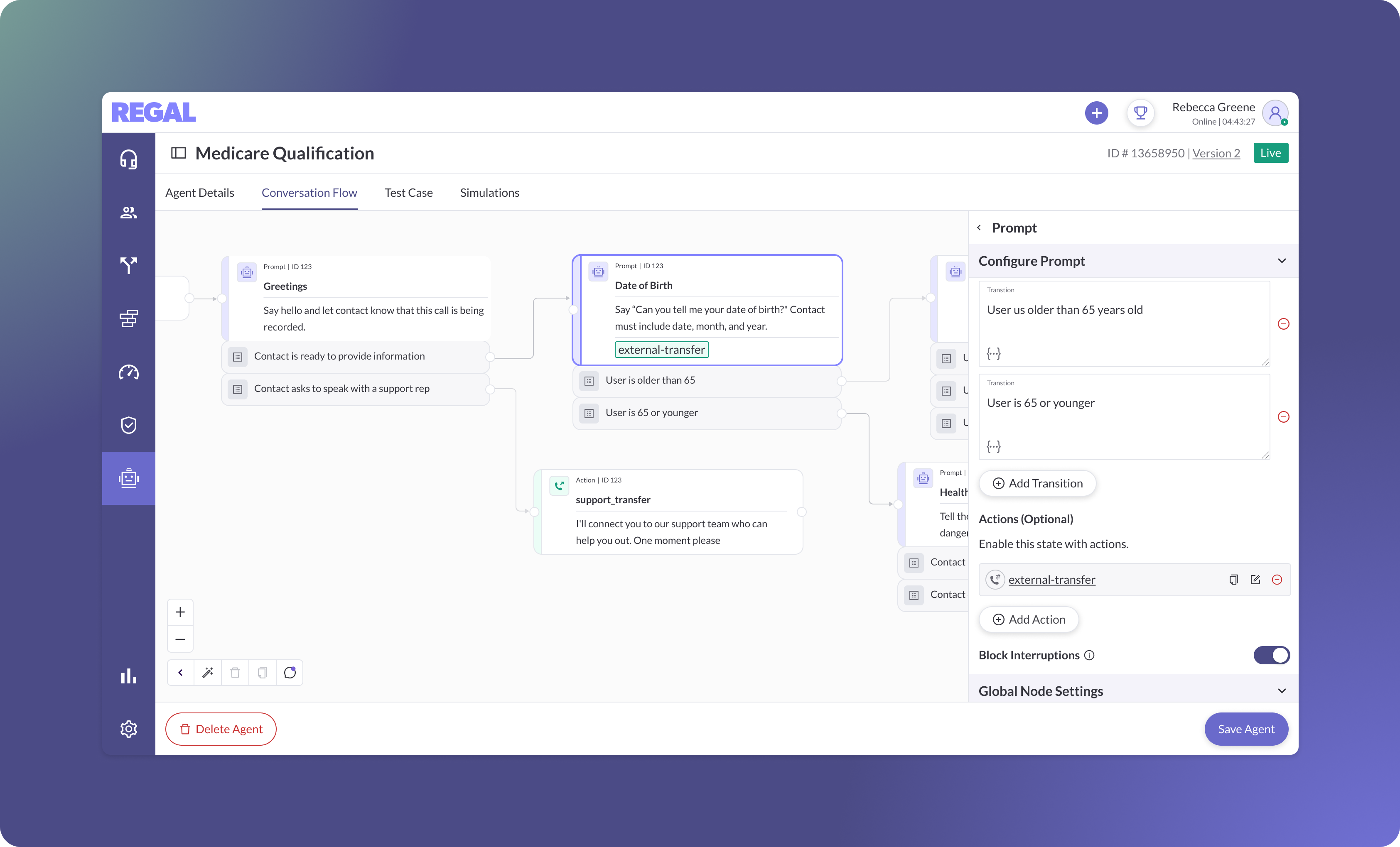

You can now invoke actions directly within your Multi-State Agent’s Prompt nodes, giving you more flexibility in how you structure and maintain complex, conditional flows. This approach streamlines your design when an action doesn’t influence the next state, needs frequent iteration, or is already referenced in your global prompt. By tying actions to the prompts themselves, you can reduce node sprawl, simplify updates, and keep your flow designs cleaner and easier to manage at scale.

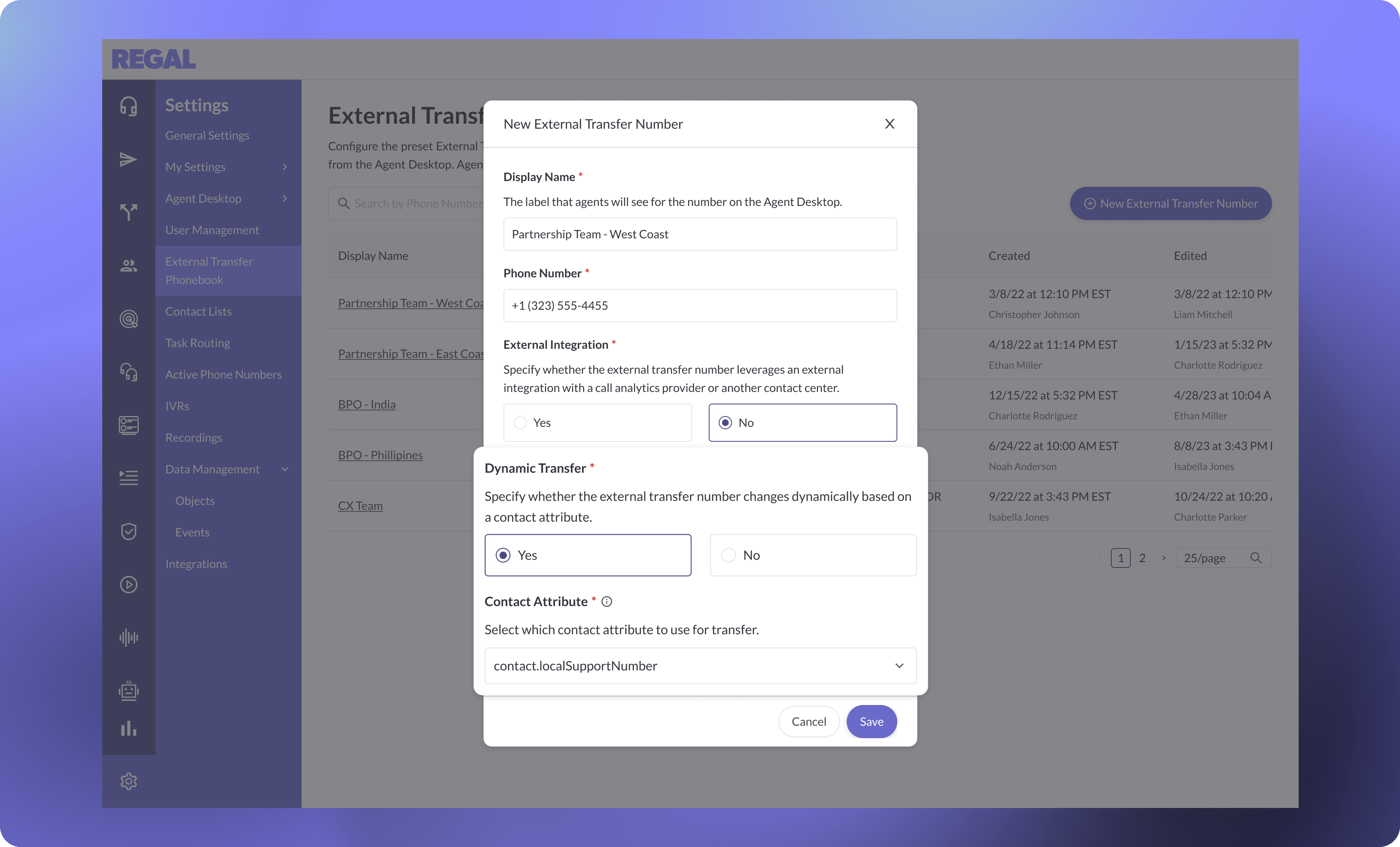

When your call flows depend on a wide range of possible transfer destinations, maintaining individual actions or building an IVR for every path becomes difficult to scale. With Dynamic External Transfers, you can route calls based on a contact attribute, such as sending West Coast leads to reps licensed in their states or directing customers to support teams aligned with their specific product type. The AI Agent will automatically select the right destination through a mapped External Transfer Phonebook entry, keeping complex routing structures organized and enabling flexible, attribute-driven transfers at scale.
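Conceptually, an attribute-driven transfer is a lookup from a contact attribute value to a destination, with a fallback when no entry matches. The sketch below is a hypothetical illustration, not Regal's External Transfer Phonebook API: it assumes a phonebook keyed by US state code and uses fictional 555-01xx numbers. `PHONEBOOK` and `resolve_transfer_destination` are illustrative names.

```python
from typing import Mapping, Optional

# Hypothetical phonebook: attribute value (state code) -> destination number.
# Keys are stored uppercase; numbers are fictional 555-01xx placeholders.
PHONEBOOK: dict[str, str] = {
    "CA": "+15555550101",
    "OR": "+15555550102",
    "WA": "+15555550103",
}


def resolve_transfer_destination(
    contact: Mapping[str, str],
    attribute: str,
    phonebook: Mapping[str, str],
    default: Optional[str] = None,
) -> Optional[str]:
    """Map a contact's attribute value to a destination, falling back to `default`."""
    value = contact.get(attribute)
    if value is None:
        return default  # contact has no value for this attribute
    # Normalize so "ca", " CA " and "CA" all match the same entry.
    return phonebook.get(value.strip().upper(), default)
```

Keeping a default destination means a contact with a missing or unmapped attribute still reaches someone instead of failing the transfer.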

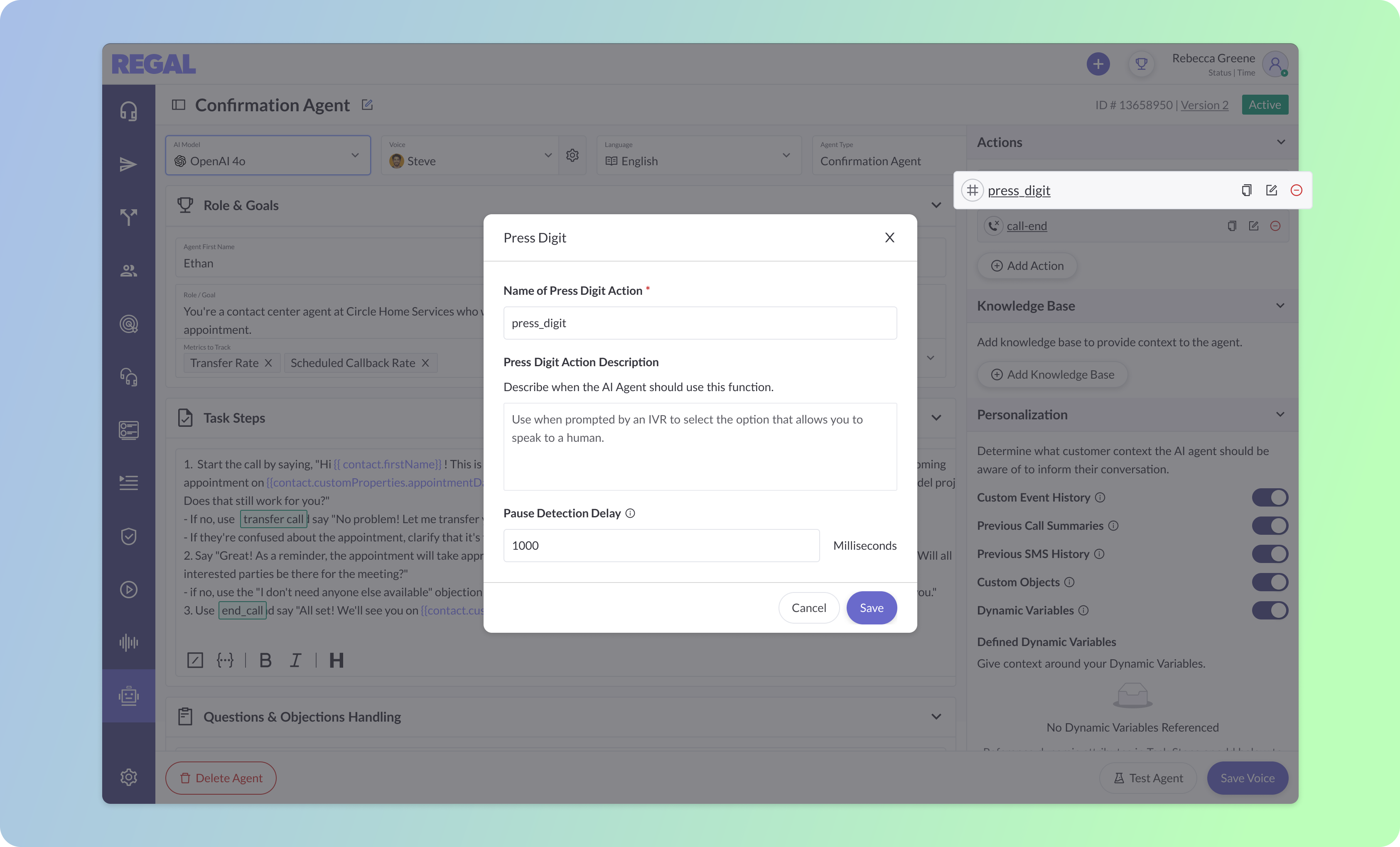

Routing steps like queue selection or brief service menus can interrupt automation before an AI Agent even begins the conversation. With the Press Digit action, AI Agents can send DTMF tones (0–9, *, and #) with precise timing to move through IVRs and phone trees reliably. Configure these routing steps once to ensure agents reach the correct destination and progress through multi-step flows smoothly, reducing the need for human intervention.
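One way to picture timed DTMF input is as a list of steps, each waiting for an IVR prompt to finish before sending its tones. The sketch below is hypothetical: `press_digits`, the `(delay, digits)` step format, and the `send_tone` callback stand in for whatever telephony layer actually emits the tones, and validation covers only the 0–9, *, and # characters named above.

```python
import time
from typing import Callable, Sequence, Tuple

# DTMF characters covered by the Press Digit description: 0-9, *, #.
VALID_DTMF = frozenset("0123456789*#")


def press_digits(
    steps: Sequence[Tuple[float, str]],
    send_tone: Callable[[str], None],
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Send each step's digits after waiting `delay` seconds (hypothetical helper)."""
    # Validate every step up front so a bad entry never leaves a call half-navigated.
    for delay, digits in steps:
        if delay < 0:
            raise ValueError(f"negative delay: {delay}")
        invalid = set(digits) - VALID_DTMF
        if invalid:
            raise ValueError(f"invalid DTMF characters: {sorted(invalid)}")

    for delay, digits in steps:
        sleep(delay)  # wait for the IVR prompt before pressing
        for digit in digits:
            send_tone(digit)
```

For example, `press_digits([(2.0, "1"), (1.5, "0#")], send_tone=sent.append, sleep=lambda s: None)` appends the tones `"1"`, `"0"`, and `"#"` to a list `sent` in order. Injecting `sleep` and `send_tone` keeps the timing logic testable without a live call.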

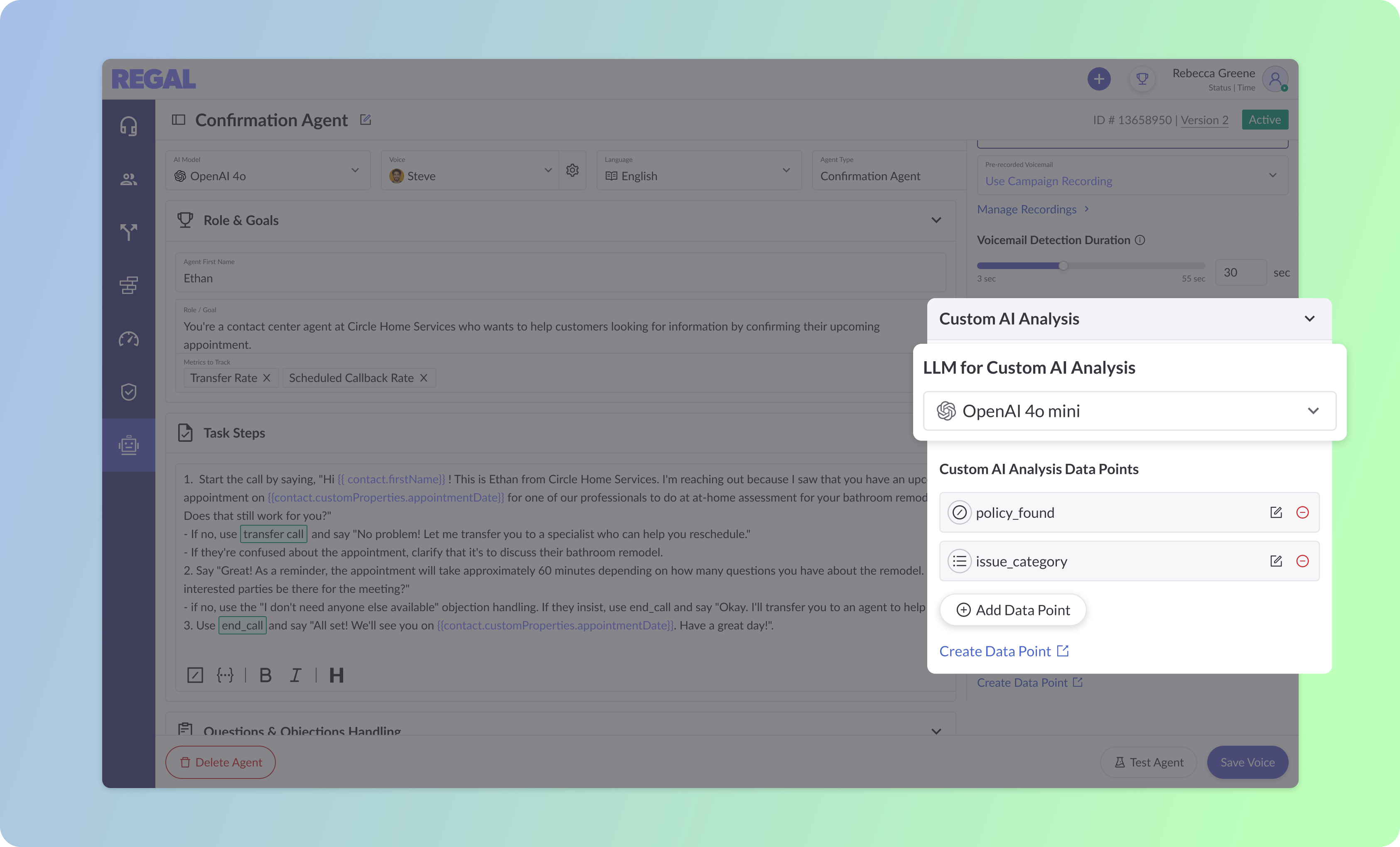

You now have full control over which LLM powers Custom AI Analysis for your agent. Choose a lighter model for fast, efficient extraction in short conversations, such as pulling basic contact details, or a more capable model to capture nuanced data points, such as loan terms or benefits eligibility, in longer, multi-step calls. With 13 LLM options available across OpenAI, Anthropic (Claude), and Google (Gemini), you can align the model to each workflow and improve the consistency and accuracy of your post-call data points.

You can now configure a pre-recorded compliance message to play automatically at the start of any outbound call, whether it’s handled by AI or a human agent. This ensures required recording disclosures are delivered every time and removes the risk of missed or inconsistent compliance language.

Deploy Regal’s AI Agents to your site via an embeddable widget that supports omnichannel conversations across chat and WebRTC voice, enabling customers to engage where they are without dialing.

Monitor latency and function call errors at the turn level to pinpoint performance bottlenecks and ensure consistent, high-quality conversations.

Automatically gather critical information during live calls using Regal’s AI Agent, then reference it later in prompts or actions to keep the conversation moving without repeated questions.
