
In the recent past, LLMs couldn’t be directed with enough technical precision to handle the full complexity of enterprise sales and support conversations.

But now? AI can handle virtually any conversation. Multi-turn, multi-task, multi-condition…

It’s no longer a question of, “can AI handle this?” It’s more a question of, “how can we build the AI to handle this?”

This is thanks to the introduction of “states” into AI agent builds. A multi-state (i.e. multi-prompt) architecture has changed the way AI agents operate. For good. And with Regal’s native drag-and-drop Multi-state Agent Builder, it’s easier than ever to construct and iterate on agents that can power the sophisticated conversations embedded into your customer lifecycles.

But it also raises a new question: When should you stick with a single-state (i.e. single-prompt) agent versus moving to a multi-state agent? How do you avoid overcomplicating AI builds, and keep your investment of time and money aligned with the complexity and impact of each flow?

In this article, we’ll go in-depth on what single-state and multi-state agents actually are, their capabilities, and when you should use each.

You’ve probably seen different terms thrown around. Some call it “multi-agent,” others “multi-state.”

In practice, they’re describing the same underlying concept—breaking AI agent tasks into smaller, manageable units so the AI isn’t relying on one giant prompt to handle everything.

It’s called “multi-state” for a reason: in customer support, one voice agent handles multiple states (i.e. all possible scenarios) along a single conversational path, the same way a human agent would.

How it Works: A multi-state agent uses two layers of instruction. One global prompt effectively personifies the agent. State-level prompts then work in tandem with the global prompt to give instructions on specific tasks and actions.

This framing makes it clear that you’re building one phone agent that can flex across all the states your enterprise conversations demand.

1. Global Prompt

This defines persistent attributes of the agent: job description, default voice, style and tone, guardrails, objection-handling, and default knowledge bases. This acts as the agent’s operating system, applied universally across every interaction.

2. State-Level Prompts

These are scoped instructions tied to specific points in a conversation flow. Each state defines:


The landscape of voice and chat-based intelligence has evolved very rapidly over the past two decades.

From basic chatbots in the early 2010s (think Apple’s Siri and Amazon Alexa) through to IVR and IVA, every option relied on rigid decision trees, with little to no ability to adapt beyond predefined paths.

Then, GenAI and LLMs were introduced to the process, giving voice agents the flexibility to handle multi-turn conversations. For the first time, they could sound natural and cover real, predictable purpose-fit workflows.

Multi-state agents represent the next evolutionary step in this progression, allowing more dynamic orchestration per conversation state, with the ability to cover more end-to-end conversations than any AI option to date.


In practice, this means multi-state will eventually replace single-state as the default design pattern.

But today, single-state still offers situational benefits when matched to the correct use cases—keeping implementation faster, and prompt management simpler.

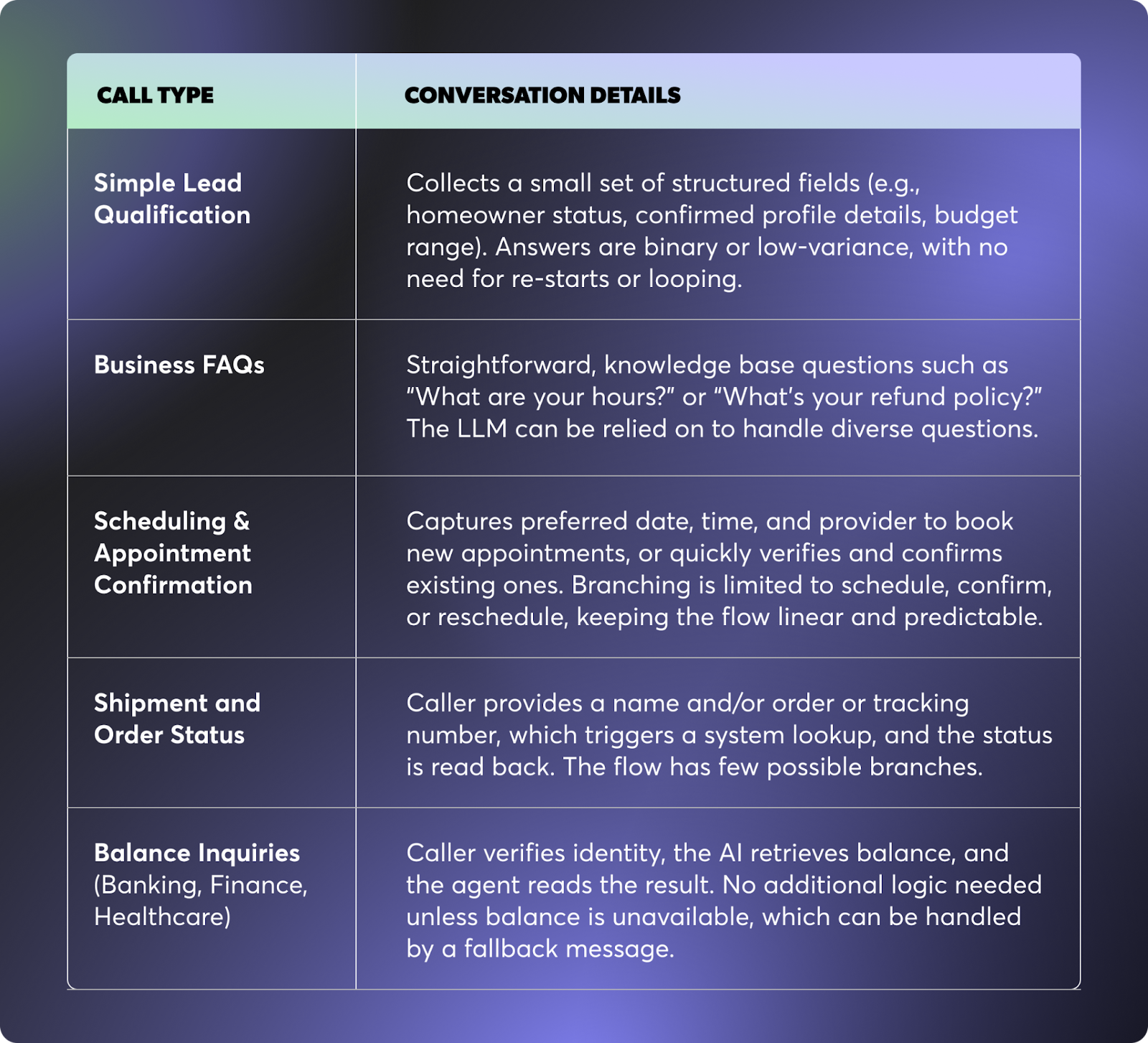

So, when is it still beneficial to use single-state AI Agents?

A single-state agent operates from one global prompt. Everything (persona, guardrails, objection handling, and tone) is applied to every interaction.

Single-state agents thrive in short, linear workflows where conversations are predictable, contain few conditional splits, and rarely need to loop back or restart.

These tasks involve very straight-line decision-making, so a single global prompt can contain all required paths without bloat.

Workflow: A home remodeling company qualifies inbound leads for sales follow-up.

Steps the agent takes:

Why single-state works: This is linear, with only one or two conditional splits. All instructions can live in one global prompt without creating context bloat. The LLM applies tone, guardrails, and instructions consistently across a predictable sequence.

Workflow: An e-commerce contact center handles “Where’s my package?” inquiries.

Steps the agent takes:

Why single-state works: This is purely transactional, with one retrieval and one response path. There’s no branching logic beyond “delivered” vs. “in transit.” A single global prompt ensures consistency without requiring state-level orchestration.

A multi-state agent separates instructions into a global prompt (consistent identity and style) and state-level prompts (scoped instructions for each node in the flow).

The agent advances from state to state. At each step, old instructions are dropped and new ones are loaded, minimizing ambiguity and keeping execution precise.
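The hand-off between states can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not Regal’s implementation: the `State` class, the `build_instructions` helper, and the prompt text are all hypothetical. The point it shows is that the global prompt is always present, while only the active state’s prompt is included at each step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    name: str
    prompt: str  # scoped instructions for this point in the conversation


# Hypothetical prompts, written for illustration only.
GLOBAL_PROMPT = "You are a friendly, compliant phone agent for an insurance provider."

STATES = {
    "intake": State("intake", "Collect the caller's name, date of birth, and zip code."),
    "eligibility": State("eligibility", "Walk through the standardized eligibility checks."),
}


def build_instructions(current_state: str) -> str:
    """Combine the global prompt with only the active state's prompt."""
    state = STATES[current_state]
    return f"{GLOBAL_PROMPT}\n\n[State: {state.name}]\n{state.prompt}"


# Moving from intake to eligibility swaps the state-level layer entirely.
intake_instructions = build_instructions("intake")
eligibility_instructions = build_instructions("eligibility")
```

In this sketch, the intake instructions never reach the model once the agent is in the eligibility state, which is the “old instructions are dropped” behavior described above.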

Strengths:

When to use multi-state:

Multi-state becomes critical when the conversation requires layered decision-making, conditional paths, and frequent pivots. Instead of stuffing every possibility into one giant prompt, you scope instructions at the state level. Each node tells the agent exactly what to do at that point—what data to collect, which knowledge base to use, what API call to trigger, or how to branch if a condition is met.
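As a rough illustration of what a state-level node might carry, here is a small Python sketch. The `StateNode` class and every field name in it are assumptions made for this example, not the schema of any particular agent builder. It mirrors the four things a node specifies above: data to collect, a knowledge base, an API call, and conditional branches.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class StateNode:
    name: str
    prompt: str
    collect: list[str] = field(default_factory=list)  # data to gather in this state
    knowledge_base: Optional[str] = None  # hypothetical knowledge base identifier
    action: Optional[str] = None  # hypothetical name of an API call to trigger
    # (condition, next_state) pairs, checked in order
    branches: list[tuple[Callable[[dict], bool], str]] = field(default_factory=list)
    default_next: Optional[str] = None

    def next_state(self, collected: dict) -> Optional[str]:
        """Return the first branch whose condition holds, else the default."""
        for condition, target in self.branches:
            if condition(collected):
                return target
        return self.default_next


# Illustrative node: route callers outside the service area to a separate state.
intake = StateNode(
    name="intake",
    prompt="Collect name, date of birth, and zip code.",
    collect=["name", "date_of_birth", "zip_code"],
    branches=[(lambda d: not d.get("in_service_area", False), "out_of_area")],
    default_next="eligibility",
)

next_for_local_caller = intake.next_state({"in_service_area": True})
next_for_remote_caller = intake.next_state({"in_service_area": False})
```

Keeping the branch conditions on the node itself means each decision point can be read and tested in isolation, rather than being buried in one long prompt.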

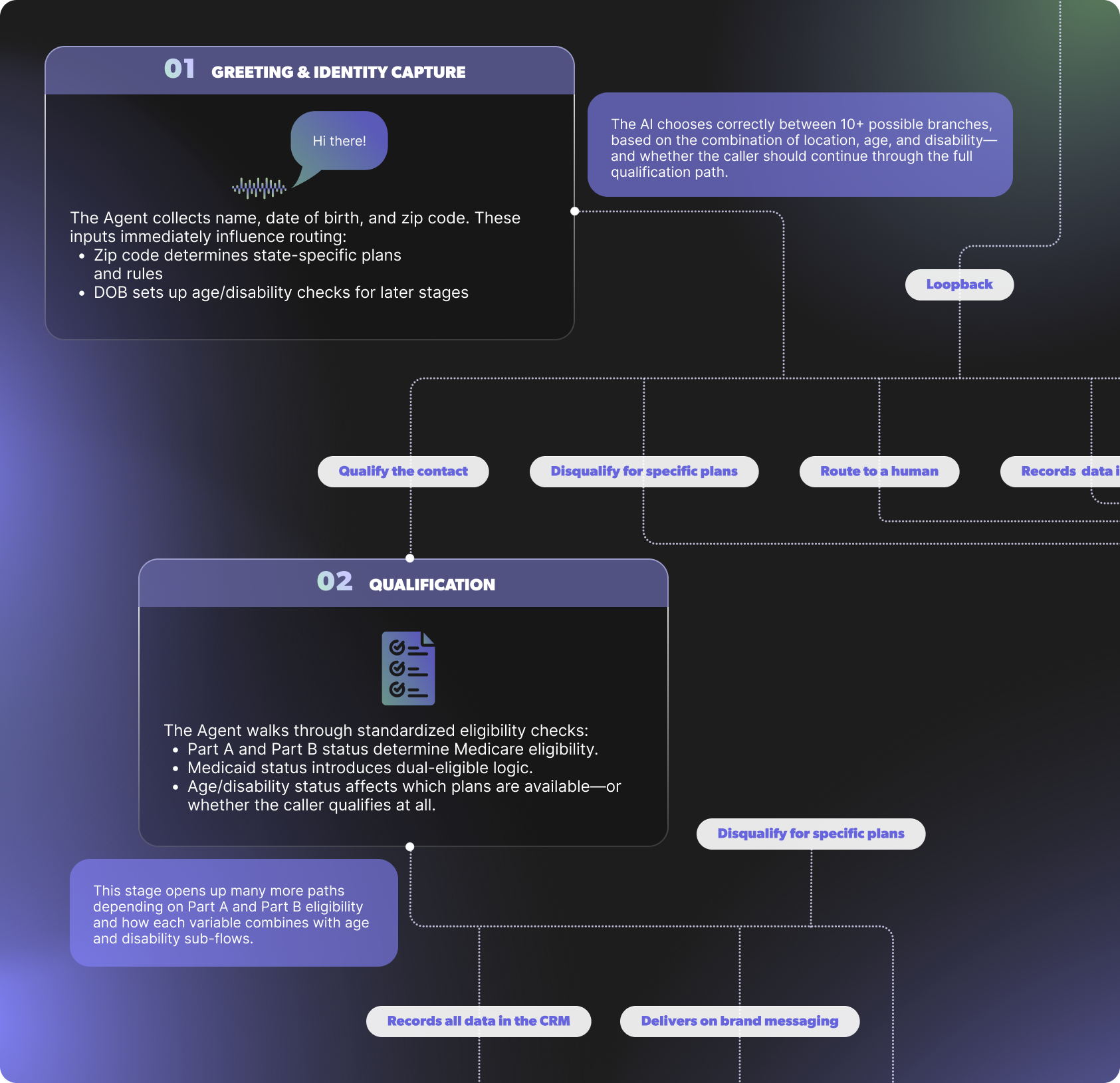

Workflow: An insurance provider qualifies callers for Medicare Advantage.

The components of that flow could look like:

The Agent collects name, date of birth, and zip code. These inputs immediately influence routing:

Even from this first step, there are already 10+ potential branches the conversation can take, based on the combination of location, age, and disability—and whether the caller should continue through the full qualification path.
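To see how quickly the branch count grows, consider a back-of-the-envelope Python sketch. The categories below are illustrative assumptions, not the provider’s actual routing rules; they simply show how three intake dimensions combine.

```python
from itertools import product

# Hypothetical routing dimensions; the categories are illustrative assumptions.
LOCATION = ["in_service_area", "out_of_service_area"]
AGE_BAND = ["under_65", "turning_65_soon", "65_or_older"]
DISABILITY = ["has_qualifying_disability", "no_qualifying_disability"]

# Every combination of the three dimensions is a distinct potential branch.
branches = list(product(LOCATION, AGE_BAND, DISABILITY))
branch_count = len(branches)  # 2 * 3 * 2 = 12
```

With just two locations, three age bands, and two disability categories, the intake step alone already yields 12 combinations, each of which may need its own handling.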

The Agent walks through standardized eligibility checks:

This stage opens up many more paths depending on Part A and Part B eligibility and how each variable combines with age and disability sub-flows.

Across dozens of possible permutations, the AI Agent applies logic to:

At each turn in this conversation, regardless of path, the Agent:

This ensures that every outcome—whether qualification, disqualification, or exception—results in the correct technical and compliance steps.

Without multi-state orchestration, this interaction would collapse into an overloaded global prompt, creating ambiguity in how each case is handled, more routing errors, and poor visibility into where the flow breaks down.

Multi-state is the next step in the evolution of AI Agents. It subsumes everything single-state can do, while unlocking the structure needed for complex, non-linear enterprise workflows. Performance is generally more predictable and scalable when instructions are scoped state by state.

That said, single-state still has an important role today. For simple, transactional flows, it offers faster implementation, lower runtime cost, and minimal maintenance overhead.

The real decision for enterprises isn’t which is better overall—it’s which matches the complexity of the conversation and the business requirements at hand.

Single-State = Simplicity and Speed — best when cost and deployment velocity outweigh complexity.

Multi-State = Structure and Scale — best when workflows demand compliance enforcement, deep integration, and fine-grained control over every decision point.

Having access to both approaches gives enterprises the flexibility to balance cost, complexity, and control. Regal's AI Agent Builder offers support for both single and multi-state flows, so that no matter your use case, you can ensure your agents perform with precision at scale, while remaining practical to implement. Schedule a demo with our team to discuss which approach is the best fit for your next AI agent build.
