September 2023 Releases

Enterprise support and sales conversations are anything but linear.

They branch in dozens of directions. They loop. They have multiple conditions and possible transitions at every turn.

To deliver personalized, outcome-driven experiences at scale, your AI agents need to be built to match the non-linear structure and complexity of enterprise conversation flows.

How you manage the prompt, trigger data retrieval, and define decision-flow logic are all major points of control over AI agent performance. They determine how many types of conversations your agent can handle, whether it handles them in a way customers enjoy, and whether it efficiently drives outcomes for your business at scale.

Regal’s Multi-State AI Agent Builder is a purpose-built way to design multi-turn, multi-decision conversation flows while embedding your distinct business logic at every turn.

It’s a non-linear interface for non-linear conversation paths.

Regal’s Multi-State AI Agent Builder splits up prompting across a global prompt (to enforce consistency) and local prompts (for fine-grained actioning and customization).

Local prompts are linked in a drag-and-drop conversation flow builder, where conversation paths can be orchestrated logically.


The result: AI Agents that not only perform at enterprise scale, but also align with your business logic while matching the depth, breadth, and complexity of real, non-linear conversations.

Multi-State AI Agents unlock control and scalability over conversations that are too complex for single-state agents.

A single global prompt can take an agent a long way.

It can define the agent’s persona and role (i.e., who the agent is and what its goals are), tone and style, guardrails, and how to handle certain questions and customer objections.

It’s effective at covering the predictable bases (i.e. the 70-80% of straightforward cases across lead qualification, scheduling, and inbound support calls).

There’s only so much that can be packed into a single prompt, however.

As soon as conversations become multi-turn and multi-decision, they require deeper, more structured prompting, and more dynamic logic informing how the AI Agent should act (based on the context of every turn).

LLMs generate responses by weighing all tokens in the conversation history.

Each conversation turn adds new information to the context window (an AI agent’s short-term memory). As that window grows, the AI Agent continues to weigh all of it.

The problem: LLMs don’t have a built-in way to logically structure and parse tokens in a way that aligns with your customers and desired outcomes.

The result: Unpredictable behavior and performance. Your AI Agent’s ability to consistently and precisely follow directions weakens the longer and more complex the conversation and prompt become.

From a prompting perspective, relying on a single giant prompt simply doesn’t scale. Massive prompts are:

A single prompt isn’t equipped to guide decision-making across complex flows. As conversations unfold, you’re asking the model to somehow know:

Multi-State AI Agents help make sure your prompts don’t become a liability.

Multi-State lets you make each turn, and each decision, its own state. That reduces how much the agent has to weigh at any single turn, which leads to more reliable performance at scale. You can set clearer guidance for:

It’s simple in concept but hard in practice: Multi-State breaks your AI Agent build into more parts, leaving fewer decisions for the agent to make at each conversation turn. That makes it more likely to deliver personalized, sharp, business-aligned responses, even in complex, multi-condition conversation flows.
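To make the difference concrete, here’s a rough Python sketch that compares how much instruction text an agent is handed per turn in each design. It assumes word count as a crude proxy for tokens, and the prompts and state names are hypothetical placeholders, not Regal’s actual configuration.

```python
# Rough comparison of how much instruction text an agent receives per turn.
# Assumption: word count is a crude stand-in for tokens (no real tokenizer here).

GLOBAL_PROMPT = "You are Ava, a friendly benefits advisor. Be concise, warm, and accurate."

# Hypothetical state-level prompts for an insurance qualification flow.
STATE_PROMPTS: dict[str, str] = {
    "greet": "Greet the lead, explain why you are calling, and ask if now is a good time.",
    "schedule_callback": "Offer two callback times and confirm the one the lead picks.",
    "ask_headcount": "Ask how many employees the company has today.",
    "ask_growth": "Ask how many new hires they expect over the next twelve months.",
    "compare_plans": "Compare plan options using current headcount and projected growth.",
}


def word_count(text: str) -> int:
    return len(text.split())


def single_state_instruction_size() -> int:
    """One giant prompt: every step's instructions are sent on every turn."""
    return word_count(GLOBAL_PROMPT) + sum(word_count(p) for p in STATE_PROMPTS.values())


def multi_state_instruction_size(state: str) -> int:
    """Multi-state: the global prompt plus only the active state's prompt."""
    return word_count(GLOBAL_PROMPT) + word_count(STATE_PROMPTS[state])


if __name__ == "__main__":
    print(f"single-state (every turn): {single_state_instruction_size()} words")
    for name in STATE_PROMPTS:
        print(f"multi-state ({name}): {multi_state_instruction_size(name)} words")
```

The gap widens with every state you add: the single prompt grows with each new branch, while any one state’s instructions stay about the same size. (The sketch counts instructions only; conversation history accumulates in both designs.)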

Regal’s Multi-State Agent Builder helps you control performance at scale by letting you manage a global prompt and state-level prompts separately.

That means you define who the agent is in a global prompt and, in a series of separate mini-prompts, instruct how it should act at each individual step of the conversation flow.

You can scope every turn to only the script, knowledge base, voice, model, and custom variables the AI needs to complete that exact turn.
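As an illustration of that isolation, the Python sketch below models a state as a small record and assembles the context for one turn. The `AgentState` and `TurnContext` types, the `build_turn_context` helper, and the prompt layout are hypothetical; they show the idea, not how Regal stores or sends states.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """A single conversation state, scoped to what that turn needs (hypothetical schema)."""
    name: str
    prompt: str
    knowledge_bases: list[str] = field(default_factory=list)
    custom_variables: list[str] = field(default_factory=list)


@dataclass
class TurnContext:
    system_prompt: str
    knowledge_bases: list[str]
    custom_variables: list[str]


def build_turn_context(global_prompt: str, global_kbs: list[str], state: AgentState) -> TurnContext:
    # The global prompt says who the agent is; the state prompt says what to do right now.
    system_prompt = f"{global_prompt}\n\nCurrent step ({state.name}):\n{state.prompt}"
    # Global knowledge bases apply on every path; state-level ones are added only for this turn.
    kbs = global_kbs + [kb for kb in state.knowledge_bases if kb not in global_kbs]
    # Only the variables this state needs are exposed to it.
    return TurnContext(system_prompt, kbs, list(state.custom_variables))


if __name__ == "__main__":
    compare_plans = AgentState(
        name="compare_plans",
        prompt="Compare plan options for the lead's current and projected headcount.",
        knowledge_bases=["plan_catalog"],
        custom_variables=["employee_count", "projected_hires"],
    )
    ctx = build_turn_context("You are Ava, a friendly benefits advisor.", ["company_faq"], compare_plans)
    print(ctx.system_prompt)
    print(ctx.knowledge_bases)
```

Keeping global knowledge bases in every turn and adding state-level ones only where they’re needed mirrors the split between agent-wide settings and individual nodes.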

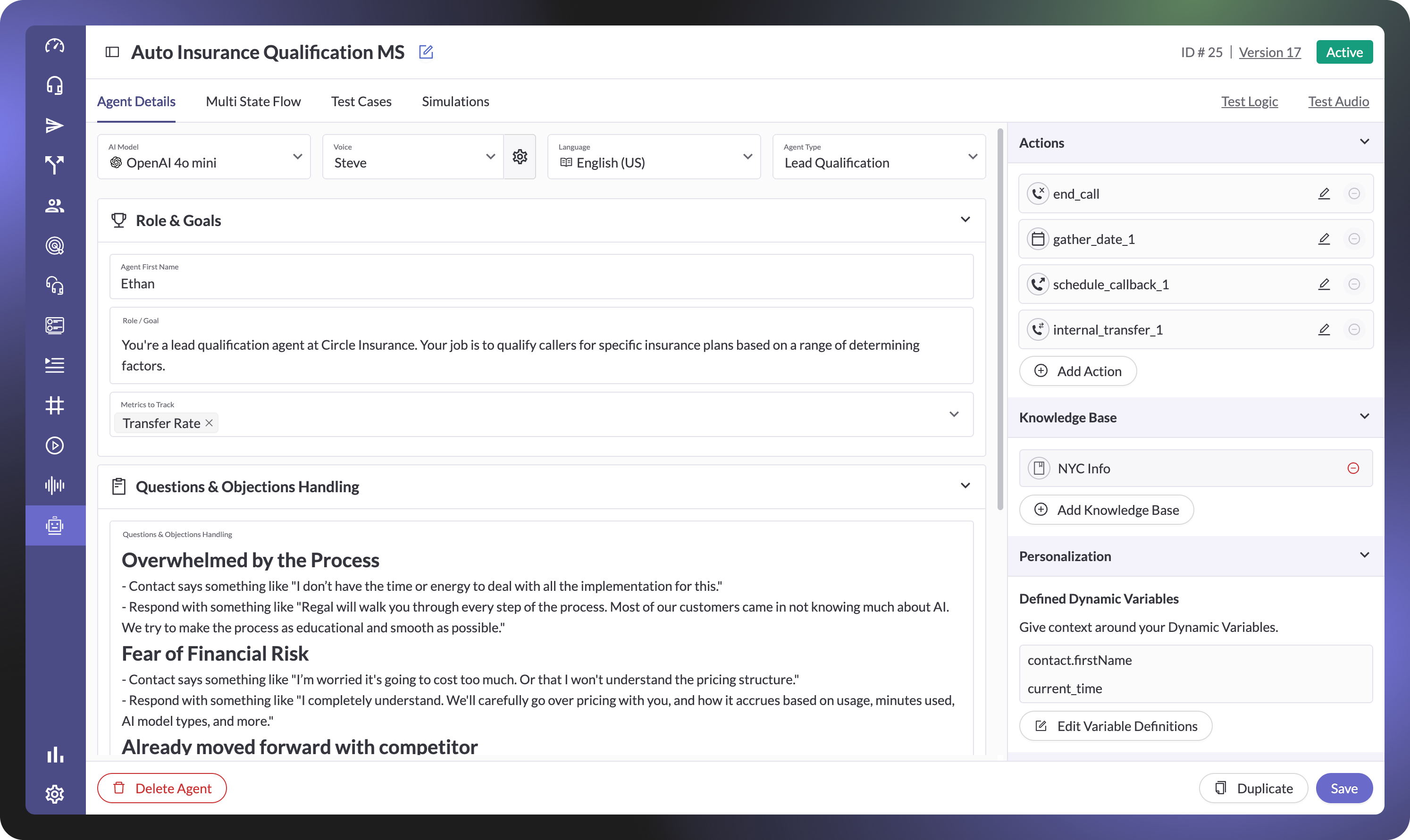

As you would in a single-state setting, in Agent Details, you’ll define the agent’s:

You’ll also set general data retrieval pathways and on-call settings (fallback voices, voicemail settings, Custom Analysis)—here you can add custom actions and knowledge bases that would carry across all conversation paths.

The global prompt impacts every interaction the agent has (unless overridden by state-level prompts, which we’ll touch on below).

Consider this the guiding principle of who your agent is and the style in which they interact.

When you move to the Conversation Flow tab, you can orchestrate the AI Agent’s decision-making patterns in a visual conversation flow.


This separation of global and state-level prompting minimizes the noise around decision-making for the LLM. For every individual turn in the conversation flow, it gives you precise control over:

With more points of control comes more points to customize interactions with customers. State-level nodes allow you to define RAG pathways, what custom variables to capture (to use later in the flow), and multi-layered logic that determines what happens next for the contact.
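Variable capture is what lets a later state build on an earlier answer. Here’s a minimal Python sketch of the idea using the standard library’s `string.Template`; the `FlowMemory` class and the variable names are hypothetical.

```python
from string import Template


class FlowMemory:
    """Custom variables captured in earlier states, reusable later in the flow (hypothetical)."""

    def __init__(self) -> None:
        self.variables: dict[str, str] = {}

    def capture(self, name: str, value: str) -> None:
        self.variables[name] = value

    def render(self, state_prompt: str) -> str:
        # safe_substitute leaves ${placeholders} untouched if that variable wasn't captured yet.
        return Template(state_prompt).safe_substitute(self.variables)


if __name__ == "__main__":
    memory = FlowMemory()
    # Values captured by earlier qualification states.
    memory.capture("company_name", "Acme Dental")
    memory.capture("employee_count", "45")

    later_prompt = "Recommend plans sized for ${company_name}'s ${employee_count} employees in ${region}."
    print(memory.render(later_prompt))
    # Recommend plans sized for Acme Dental's 45 employees in ${region}.
```

`safe_substitute` is a deliberate choice here: a prompt that references a variable no earlier state captured keeps its placeholder (here, `${region}`) instead of raising `KeyError`, which makes gaps easy to spot in testing.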

This layered prompting drives:

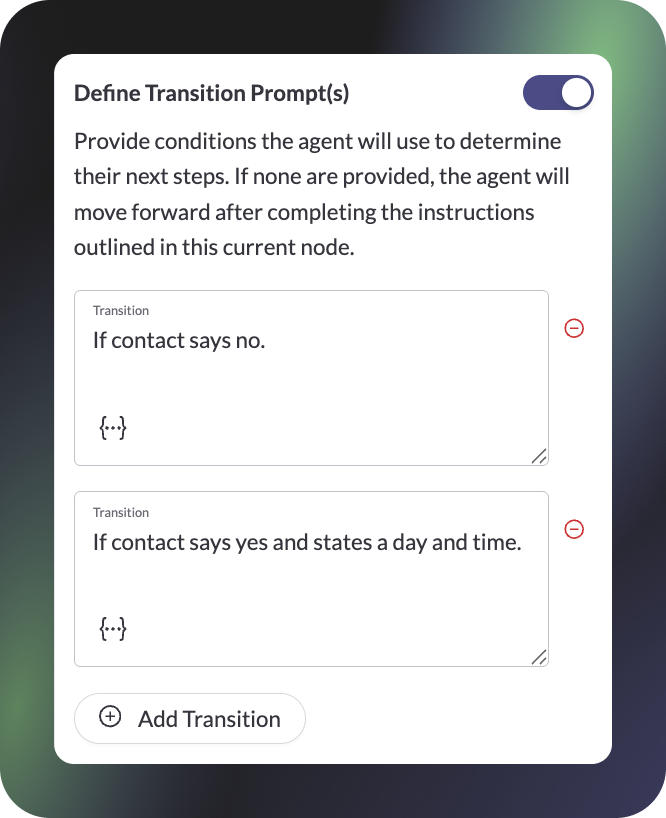

Transition Prompts are used within Prompt and Action Nodes to define the conditions that determine next steps (e.g., if the contact says “yes,” do A; if the contact says “no,” do B).

Let’s consider an industry with a complex lead qualification flow—employer health insurance.

For this flow, Prompt Nodes would be used to instruct the AI agent to greet the lead warmly, explain the objective of the call, ask if it’s a good time, and then either schedule a callback or carry on with qualification questions. Prompt Nodes would also be used at each step to instruct the AI how to ask and respond to each of the qualifying questions.


Transition prompts would be used throughout to guide the AI on how to react to different answers. For example, when the agent asks “Is now a good time to talk?”, the transition prompt would define that “no” triggers a path to schedule a callback, while “yes” has the AI continue with the qualification questions.
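Here’s a minimal Python sketch of that branching. Each node carries a prompt and a table of transitions; `pick_transition` is a hypothetical keyword-based stand-in for the LLM evaluating a transition prompt, and the node names are illustrative rather than Regal’s configuration format.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    prompt: str
    # Transition label -> name of the next node. Empty means the flow ends here.
    transitions: dict[str, str] = field(default_factory=dict)


# Hypothetical slice of the employer health insurance qualification flow.
FLOW: dict[str, Node] = {
    "greet": Node("greet", "Greet the lead warmly and explain the purpose of the call.",
                  {"next": "good_time"}),
    "good_time": Node("good_time", "Ask whether now is a good time to talk.",
                      {"yes": "ask_headcount", "no": "schedule_callback"}),
    "schedule_callback": Node("schedule_callback", "Offer a callback time and confirm it."),
    "ask_headcount": Node("ask_headcount", "Ask how many employees the company has.",
                          {"next": "ask_growth"}),
    "ask_growth": Node("ask_growth", "Ask how much new headcount they project this year."),
}


def pick_transition(node: Node, reply: str) -> str:
    """Stand-in for an LLM evaluating the node's transition prompt (naive keyword check)."""
    if "yes" in node.transitions and "no" in node.transitions:
        return "yes" if reply.strip().lower().startswith(("yes", "sure")) else "no"
    return "next"


def run(flow: dict[str, Node], start: str, replies: list[str]) -> list[str]:
    """Walk the flow with scripted replies and return the path of node names."""
    path = [start]
    node = flow[start]
    for reply in replies:
        if not node.transitions:
            break  # terminal node: nothing left to route
        node = flow[node.transitions[pick_transition(node, reply)]]
        path.append(node.name)
    return path


if __name__ == "__main__":
    print(run(FLOW, "greet", ["Hi!", "Not really, I'm driving."]))
    # ['greet', 'good_time', 'schedule_callback']
    print(run(FLOW, "greet", ["Hello", "Sure, go ahead", "About 40 people"]))
    # ['greet', 'good_time', 'ask_headcount', 'ask_growth']
```

In a real agent the routing decision comes from the model reading the transition prompt, not from keyword matching; the sketch only shows how a decision maps to the next node.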

While qualifying the lead, the AI agent might have to handle more complex math and reasoning (e.g., weighing the number of employees and projected new headcount across different plan options).

To address this, within the Prompt Node, you could switch from GPT-4o mini (set in the global prompt) to GPT-4o, which is better suited for more complex or open-ended reasoning. Once that turn is complete, the agent reverts to the global settings.
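A per-node model override that reverts after the turn amounts to a simple fallback rule. This Python sketch uses placeholder model names and a hypothetical `NodeSettings` record; it illustrates the inheritance behavior, not Regal’s implementation.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder model names; substitute whatever models your platform exposes.
GLOBAL_MODEL = "fast-model"


@dataclass
class NodeSettings:
    name: str
    model: Optional[str] = None  # None = inherit the model set at the global level


def model_for_turn(node: NodeSettings, global_model: str = GLOBAL_MODEL) -> str:
    # The override applies only while this node is active; the next node
    # falls back to the global model unless it sets its own override.
    return node.model if node.model is not None else global_model


if __name__ == "__main__":
    turns = [
        NodeSettings("ask_headcount"),
        NodeSettings("compare_plans", model="reasoning-model"),
        NodeSettings("confirm_next_steps"),
    ]
    for node in turns:
        print(node.name, "->", model_for_turn(node))
```

Because the override lives on the node rather than being written back to the global setting, there is nothing to "undo" after the turn: the next node simply resolves its own model.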


Prompt Nodes are important for driving the conversation forward in a manner that’s pleasant and productive.

The Action Nodes then make sure tangible (backend) actions are taken to turn those conversations into outcomes. For this flow, you’d use Action Nodes like:


Enterprises need agents that can handle the full breadth and depth of possible call paths. Multi-State is built for that non-linearity.

It gives you the structure to orchestrate dozens of branches, enforce checkpoints, and adapt dynamically to customer inputs, without overloading a single prompt or relying on the LLM to hold it all in memory.

And since you’re prompting at a more granular level, you can account for more scenarios, data points, objections, and “if-then” conditions in each conversation flow.

Consider the following support flow:

In cases like this, being able to link nodes in a non-linear manner is crucial. The agent can cleanly loop back to the beginning of the flow, or enter a new state to handle unrelated questions (without having to transfer).
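Because the flow is a graph rather than a tree, an edge can point back to an earlier node. This Python sketch walks a hypothetical support flow using transition labels that are assumed to have already been decided (by transition prompts, in a real agent); the node and label names are illustrative.

```python
# Hypothetical support flow: node -> {transition label: next node}.
# Unlike a tree, edges may point back to earlier nodes (a cycle).
SUPPORT_FLOW: dict[str, dict[str, str]] = {
    "identify_issue": {"billing": "billing_help", "unrelated": "general_questions"},
    "billing_help": {"resolved": "anything_else"},
    "general_questions": {"answered": "anything_else"},
    "anything_else": {"yes": "identify_issue", "no": "wrap_up"},  # loops back to the start
    "wrap_up": {},
}


def walk(flow: dict[str, dict[str, str]], start: str, labels: list[str]) -> list[str]:
    """Follow already-decided transition labels and return the visited nodes, in order."""
    path = [start]
    node = start
    for label in labels:
        if label not in flow[node]:
            raise ValueError(f"no transition {label!r} from {node!r}")
        node = flow[node][label]
        path.append(node)
        if not flow[node]:
            break  # reached a terminal node
    return path


if __name__ == "__main__":
    # A billing question, then an unrelated question, then done; no transfer needed.
    print(walk(SUPPORT_FLOW, "identify_issue",
               ["billing", "resolved", "yes", "unrelated", "answered", "no"]))
```

The same `identify_issue` node is visited twice in one call, which is exactly the loop-back behavior a strictly linear script can’t express.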

Part of the challenge with DIY solutions is that this complexity can quickly become unmanageable if you’re only working in text or code.

That’s why Regal centralizes orchestration in an accessible drag-and-drop builder. All the underlying logic—global prompts, state-level overrides, multi-condition decisions, variable capture—is there, built into the aforementioned nodes.

The interface keeps it intuitive to design, adjust, and manage.


With the builder, you can:

Multi-State takes complexity that would otherwise be fragile and unmanageable and makes it structured, centralized, and accessible—in a way that translates to the customer-side as well.

Building complex, multi-branch flows is only part of the equation—you also need confidence that those flows will perform as intended. With Regal’s Test Logic and Test Audio functions, you can preview both the decision pathways and the end-user experience before deploying.

You can validate conversation logic to make sure your agent follows branches correctly, checking details like which actions were invoked and the reason behind each transition.
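Conceptually, a logic test compares the path an agent actually took against the path you expected. The Python sketch below assumes a hypothetical trace format (`TraceStep`, with from/to nodes, a transition reason, and an optional action name such as the made-up `book_callback`); it isn’t Regal’s Test Logic output, just an illustration of the kinds of checks it enables.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TraceStep:
    """One transition recorded during a test run (hypothetical trace format)."""
    from_node: str
    to_node: str
    reason: str                   # why the transition fired
    action: Optional[str] = None  # backend action invoked at this step, if any


def check_trace(trace: list[TraceStep], expected_path: list[str],
                expected_actions: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the run matched expectations."""
    problems: list[str] = []
    path = ([trace[0].from_node] + [s.to_node for s in trace]) if trace else []
    if path != expected_path:
        problems.append(f"path {path} != expected {expected_path}")
    actions = [s.action for s in trace if s.action is not None]
    if actions != expected_actions:
        problems.append(f"actions {actions} != expected {expected_actions}")
    for s in trace:
        if not s.reason.strip():
            problems.append(f"missing transition reason for {s.from_node} -> {s.to_node}")
    return problems


if __name__ == "__main__":
    run = [
        TraceStep("greet", "good_time", "greeting delivered"),
        TraceStep("good_time", "schedule_callback", "lead said now is not a good time",
                  action="book_callback"),
    ]
    print(check_trace(run, ["greet", "good_time", "schedule_callback"], ["book_callback"]))  # []
```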


Test Audio goes a step further, letting you assess conversational quality as well as voice quality: whether the AI delivers a natural, on-brand experience.

This way, you’ll spot potential drop-offs before customers ever pick up the phone—reducing risk, cutting iteration cycles, and ensuring your AI Agent is production-ready, no matter how complex the use case.

Enterprises don’t need AI agents that simply “sound good,” they need agents that can reliably perform in the messy, non-linear reality of real customer conversations.

For sophisticated conversations with branching logic, that requires more than a single prompt and more than patched-together tools. It requires a purpose-built way to design, orchestrate, and measure conversation flows on a path-by-path level.

With Regal’s Multi-State Agent Builder, enterprises get exactly that: a centralized platform where global rules, state-level prompts, orchestration, and reporting live together.

Multi-State is about giving you the structure and visibility needed to scale AI agents with confidence across any level of unique complexity.

Ready to start building? Schedule a demo with us.
