
Anyone who’s spent time with a five-year-old knows the pattern: tell them what not to do and they'll do just that. Tell them what not to do along with what to do instead, and they might just listen. The same dynamic exists when you’re building and guiding an AI voice agent.

Just like that five-year-old who interprets "don't run in the house" as an invitation to speed-walk at Olympic pace, AI agents take your instructions quite literally. The difference is that, with the right prompting and guardrails, an AI agent will reliably listen. And once you understand how to communicate with it effectively, you can build voice agents that sound natural, stay on task, and deliver consistently high-quality experiences.

Of course, designing a dependable AI voice agent takes more than good intentions. It needs clear instructions, concrete examples, and guardrails shaped by thousands of hours building and refining voice agents in production and seeing what actually works (or doesn’t). In this article, we’ll share our learnings from the field, the prompting principles that have consistently held up in live customer conversations: how to guide tone, structure interactions, model good behavior, and give your agent the clarity it needs to do what you meant all along.

Just as with a five-year-old, the smallest ambiguity can send your AI voice agent in an unintended direction, which is why demonstrating your intended behavior is crucial to predictable, consistent performance. Negative instructions like “don’t be rude” or “don’t interrupt” leave too much room for interpretation, and the agent fills that gap with whatever logic it can find. The agent performs best when you describe exactly what good behavior looks like.

Bad instruction: "Don't be rude to customers."

What the AI hears: "Rude? How do we define rude? Should I avoid directness? Or soften every response? Time to improvise!"

Better instruction: "Always greet customers warmly by name. When delivering disappointing news, start with empathy: 'I understand this is frustrating. Here's what I can do to help.'"

Just like with kids, vague directives lead to creative interpretations you didn't intend. The solution is the same in both cases: be specific, be concrete, and show exactly what “good” looks like. When you paint the picture, your AI voice agent can follow it — predictably, consistently, and without improvising its own version of the rules.

Five-year-olds don’t learn from abstract rules — they learn by example. "Be gentle with the cat" becomes crystal clear when you show them how to pet it softly. AI voice agents follow the same pattern: the more concrete examples you provide, the more reliably they generalize the behavior you want.

High-level instructions like “handle objections professionally” sound clear to you, but to an AI voice agent they’re wide open to interpretation. Instead, give the agent actual examples of the tone, structure, and language you expect.

Instead of saying: "Handle objections professionally," give your agent an example like:

Customer: "This is too expensive."

Agent: "I understand budget is important. Many of our customers felt the same way initially. What they found is that the time saved pays for itself within the first month. Would you like to see a breakdown of the ROI?"

Examples work because they do two jobs at once:

Just like demonstrating the right way to pet the cat, examples show your agent how to act, not just what to avoid. The more specific and varied scenarios you provide, the better your agent will generalize to similar situations.
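One way to keep examples organized is to store them as data and assemble the prompt from them. The following is a minimal sketch, not a Regal API: the `Example` class, the `build_prompt` function, and the prompt layout are all hypothetical names chosen for illustration. The sample turn is the objection example from above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Example:
    """One demonstration turn: what the customer says and the reply we want."""
    customer: str
    agent: str


def build_prompt(instruction: str, examples: List[Example]) -> str:
    """Join an instruction and its demonstrations into one plain-text prompt.

    Assumption: the target model accepts a plain-text prompt; the layout
    below is illustrative, not a required format.
    """
    lines = [instruction.strip(), "", "Examples of the expected behavior:"]
    for number, example in enumerate(examples, start=1):
        lines.append("")
        lines.append(f"Example {number}")
        lines.append(f'Customer: "{example.customer}"')
        lines.append(f'Agent: "{example.agent}"')
    return "\n".join(lines)


OBJECTION_EXAMPLE = Example(
    customer="This is too expensive.",
    agent=(
        "I understand budget is important. Many of our customers felt the "
        "same way initially. What they found is that the time saved pays "
        "for itself within the first month. Would you like to see a "
        "breakdown of the ROI?"
    ),
)

prompt = build_prompt(
    "Handle objections professionally, following the examples below.",
    [OBJECTION_EXAMPLE],
)
print(prompt)
```

Keeping examples in a list makes it easy to add varied scenarios over time without rewriting the instruction itself.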

Tell a five-year-old, “Don’t touch that,” and you’ll watch their hand move toward it like it’s magnetized. AI voice agents aren’t much different. When you frame instructions around the behavior you don’t want, the agent still anchors on the very concept you were trying to avoid — hold times, interruptions, jargon — and may accidentally reproduce it.

Negative rules create ambiguity. Positive framing creates clarity.

Here's what happens with negative instructions like "Don't put customers on hold without asking", "Don't interrupt customers", and "Don't use jargon".

The AI focuses on the concept you mentioned — hold, interrupt, jargon — and might do exactly what you're trying to avoid.

Try positive framing instead:

"Always ask permission before placing someone on hold: 'May I put you on a brief hold while I look that up?'", "Let customers finish their complete thought before responding", "Use simple, conversational language like you're talking to a friend."

Positive framing works because it gives the agent a specific, repeatable action to follow. Just like asking a child to “walk, please” instead of “don’t run,” you’re directing the behavior you want, not the problem you’re trying to eliminate.
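If your prompts are long, a quick scan for negatively framed lines can show where to start rewriting. This is a rough sketch under stated assumptions: the word list is a heuristic we made up for illustration, `find_negative_instructions` is a hypothetical helper, and the scan will produce false positives (for example, a persona described as "never pushy"). It only flags lines; the positive rewrite is still up to you.

```python
import re
from typing import List

# Assumption: these words are a rough signal of negative framing.
# The list is illustrative and far from exhaustive.
NEGATIVE_WORDS = re.compile(r"\b(don't|do not|never|avoid)\b", re.IGNORECASE)


def find_negative_instructions(prompt: str) -> List[str]:
    """Return the non-empty prompt lines that contain a negative-framing word."""
    flagged = []
    for line in prompt.splitlines():
        text = line.strip()
        # Normalize curly apostrophes so "don’t" and "don't" both match.
        normalized = text.replace("\u2019", "'")
        if text and NEGATIVE_WORDS.search(normalized):
            flagged.append(text)
    return flagged


draft_prompt = "\n".join(
    [
        "Don't interrupt customers.",
        "Let customers finish their complete thought before responding.",
        "Don\u2019t use jargon.",
    ]
)

flagged_lines = find_negative_instructions(draft_prompt)
for flagged_line in flagged_lines:
    print(f"Consider rewriting: {flagged_line}")
```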

Remember playing pretend as a kid? Tell a five-year-old, "You're the doctor, and I'm the patient," and suddenly everything clicks. AI voice agents respond to persona cues the same way. This framework helps the AI make thousands of micro-decisions about tone, word choice, and approach without you having to specify every single scenario.

Rules alone can make your agent sound robotic or inconsistent. A persona gives it context, and context shapes instincts.

Rules-only approach:

“Be friendly. Stay professional. Explain things clearly.”

How the agent interprets it:

Friendly how? Professional to what degree? How simple is “simple”? Time to guess.

Persona-driven approach:

"You're a knowledgeable, friendly insurance advisor who genuinely cares about helping people protect what matters most. You're patient with questions, never pushy, and you explain complex topics using everyday analogies. Think of yourself as a trusted neighbor who happens to know a lot about insurance."

A persona anchors tone, intent, and conversational style. It helps the AI decide how to break bad news, how much detail to offer, when to ask clarifying questions, and how to maintain rapport — all without relying on rigid scripts. Just like a child slipping into character, the agent instantly has a mental model to operate from. And that model becomes the foundation for consistent, natural-sounding interactions across thousands of calls.

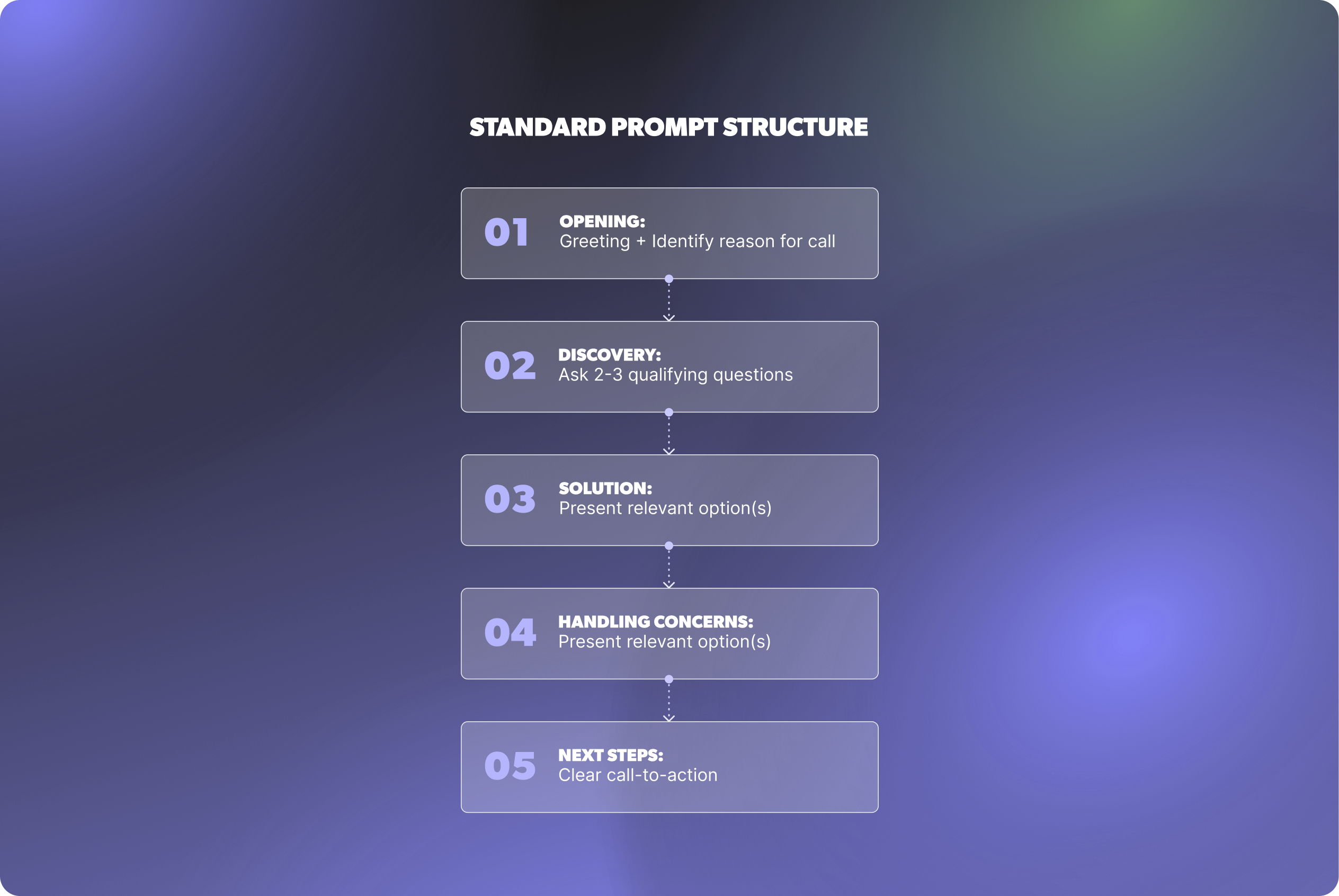

Paradoxically, kids thrive with structure. "First we clean up toys, then we have snack time" works better than "go clean your room... at some point." AI voice agents work the same way. Without structure, conversations wander or collapse into awkward transitions. With it, the agent suddenly knows where it is in the flow, what comes next, and how to move the interaction forward.

For AI voice agents, create clear conversational flows:

Within this structure, your agent can be flexible and sound natural. Without it, conversations meander or feel unnatural. Just like a routine helps a kid stay grounded, structure gives your voice agent room to be conversational — without losing the plot.
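To make "knowing where it is in the flow" concrete, here is a minimal sketch of a conversation modeled as ordered stages. The stage names (`greeting`, `discovery`, `resolution`, `closing`) and the helper functions are assumptions invented for this illustration; they are not a prescribed flow, and a real agent platform will have its own way of representing stages.

```python
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    """Hypothetical conversation stages, for illustration only."""
    GREETING = "greeting"
    DISCOVERY = "discovery"
    RESOLUTION = "resolution"
    CLOSING = "closing"


# The order of this list defines the flow.
FLOW: List[Stage] = [
    Stage.GREETING,
    Stage.DISCOVERY,
    Stage.RESOLUTION,
    Stage.CLOSING,
]


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage that follows `current`, or None at the end of the flow."""
    index = FLOW.index(current)
    if index + 1 < len(FLOW):
        return FLOW[index + 1]
    return None


def describe_position(current: Stage) -> str:
    """Build a short status line that could be added to the agent's context."""
    upcoming = next_stage(current)
    if upcoming is None:
        return f"Current stage: {current.value}. This is the final stage."
    return f"Current stage: {current.value}. Next stage: {upcoming.value}."


print(describe_position(Stage.DISCOVERY))
```

The point of the sketch is the separation of concerns: the flow fixes the order, while the wording inside each stage stays free to sound conversational.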

A wise five-year-old knows when to get a grown-up. Your AI agent needs the same wisdom. Even the best-designed agent shouldn’t power through every scenario. It should recognize when it’s out of bounds and escalate gracefully.

Define clear, simple escalation triggers like:

Knowing limitations is a superpower, not a weakness. It’s what makes your agent dependable, safe, and trustworthy in production.
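Escalation triggers tend to work best when they are simple enough to check mechanically. The sketch below assumes two hypothetical triggers chosen purely for illustration: the caller asks for a human, or the agent has failed to resolve the request a set number of times. The phrases, the threshold, and the `CallState` and `should_escalate` names are all assumptions, not a description of any particular product.

```python
from dataclasses import dataclass
from typing import Optional

# Assumptions for illustration: tune phrases and threshold to your own calls.
HUMAN_REQUEST_PHRASES = ("speak to a human", "talk to a person", "real person")
MAX_FAILED_ATTEMPTS = 2


@dataclass
class CallState:
    """Minimal snapshot of a call: the latest caller utterance and a retry count."""
    last_utterance: str
    failed_attempts: int = 0


def should_escalate(state: CallState) -> Optional[str]:
    """Return a reason to hand off to a human, or None to keep going."""
    text = state.last_utterance.lower()
    if any(phrase in text for phrase in HUMAN_REQUEST_PHRASES):
        return "caller asked for a human"
    if state.failed_attempts >= MAX_FAILED_ATTEMPTS:
        return "request unresolved after repeated attempts"
    return None


reason = should_escalate(CallState("Can I talk to a person, please?"))
print(reason)
```

Returning a reason rather than a bare true or false makes it easier to review later why a given call was handed off.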

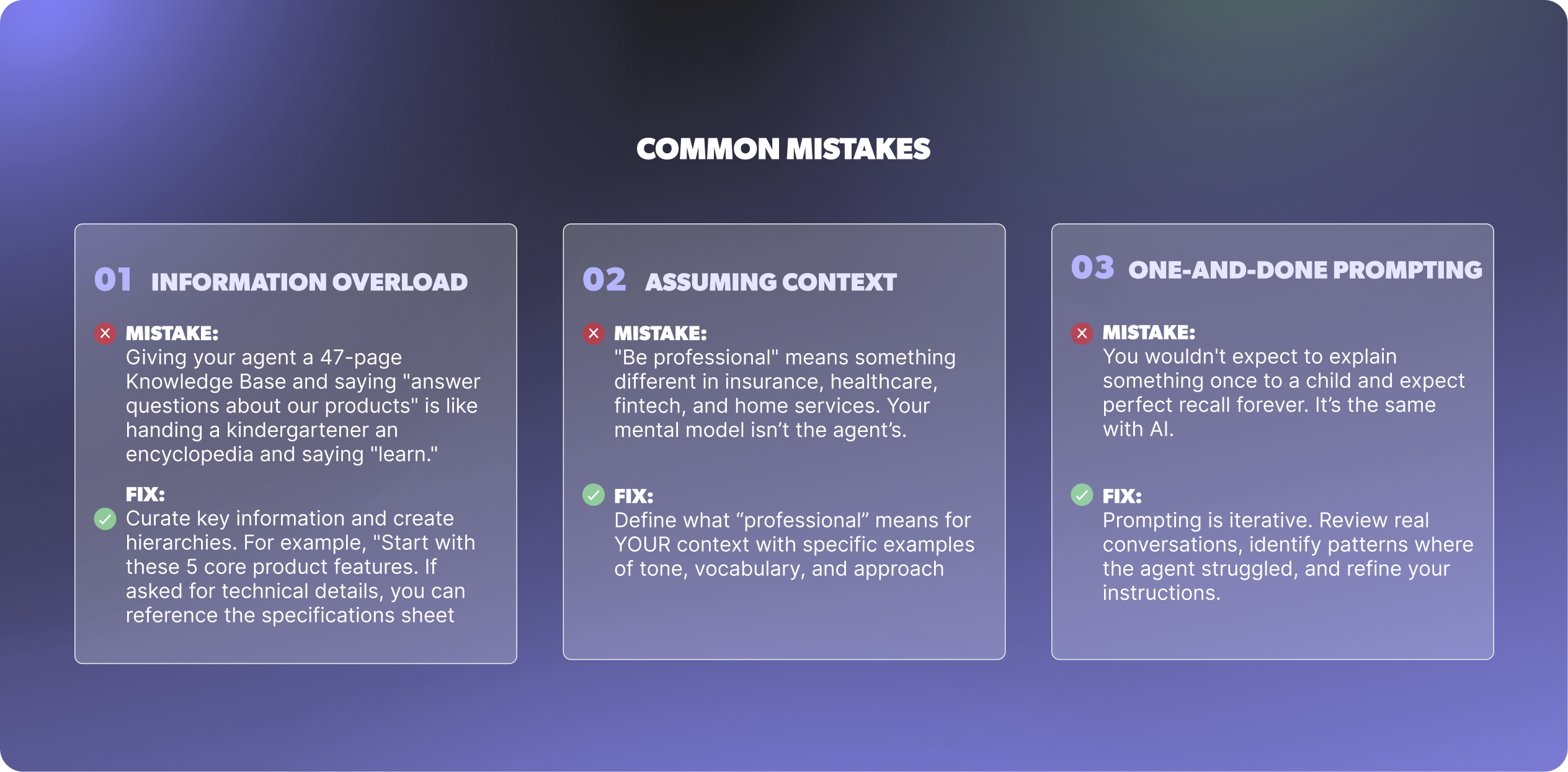

Even with the best intentions, it’s easy to give an AI voice agent instructions that sound clear to you but land very differently for the LLM. These are the pitfalls we see most often — and the fixes that consistently help.

These mistakes happen to everyone, but recognizing them early makes your agent more consistent and far easier to manage in production.

Here's what makes voice agents at Regal AI particularly challenging and interesting: they're having real-time conversations. Unlike chatbots, where customers can pause to think and reread responses, voice is immediate and linear.

That’s why conversational behaviors matter. A few simple patterns go a long way toward keeping interactions natural and grounded:

These behaviors aren’t fluff — they’re the signposts that make voice interactions feel human and keep the conversation moving in the right direction. Think of them as the social skills you teach a kid so they don’t accidentally derail a playdate. Add the right guardrails, and the agent sounds thoughtful rather than mechanical.

A five-year-old will never be more patient than a well-rested adult. But an AI agent? It's always patient, never has a bad day, never gets frustrated, and treats the 500th caller with the same enthusiasm as the first.

This consistency is where AI quietly outperforms even top human agents. It delivers empathy on command, follows process reliably, and keeps its cool no matter how tough the conversation gets. When you prompt effectively, you're not just avoiding mistakes; you're creating consistently delightful experiences at scale.

Prompting an AI voice agent requires the same skills as explaining the world to a curious, literal-minded five-year-old: patience, specificity, examples, positive framing, and lots of iteration.

But here's the best part: once you nail those prompts, your AI agent will consistently follow those instructions at scale, applying best practices in every interaction. Your five-year-old will eventually grow up and stop listening. Your AI agent just gets better at listening the more you teach it.

So embrace your inner kindergarten teacher. Be specific. Use examples. Show them what good looks like.

Ready to put these best practices to use? At Regal, we pair you with a Forward Deployed Engineer to expertly guide you through every step of the process, from designing prompts to deploying your agent and refining with real conversations. Schedule a demo with us to get started.
