As contact centers move beyond simple AI Voice Agent use cases like capturing a callback request or asking a few qualification questions, and start deploying AI Voice Agents to fully own more complex interactions – like coordinating roadside assistance or guiding a customer through a full booking flow – a new challenge is emerging: most APIs were never designed with AI agents in mind.

They were built for deterministic systems: frontends, backends, and workflows that follow strict patterns. But AI Voice Agents aren’t browsers; they’re collaborators that are probabilistic, interpretive, and conversational. They need clear, structured, and contextual access to information and actions, in order to function effectively in real-time conversations. Trying to shoehorn old APIs into LLM-driven workflows can lead to poor agent performance, unnecessary complexity, and brittle integrations. The better your APIs help AI agents reason, explain, and act, the better your customer experience will be.

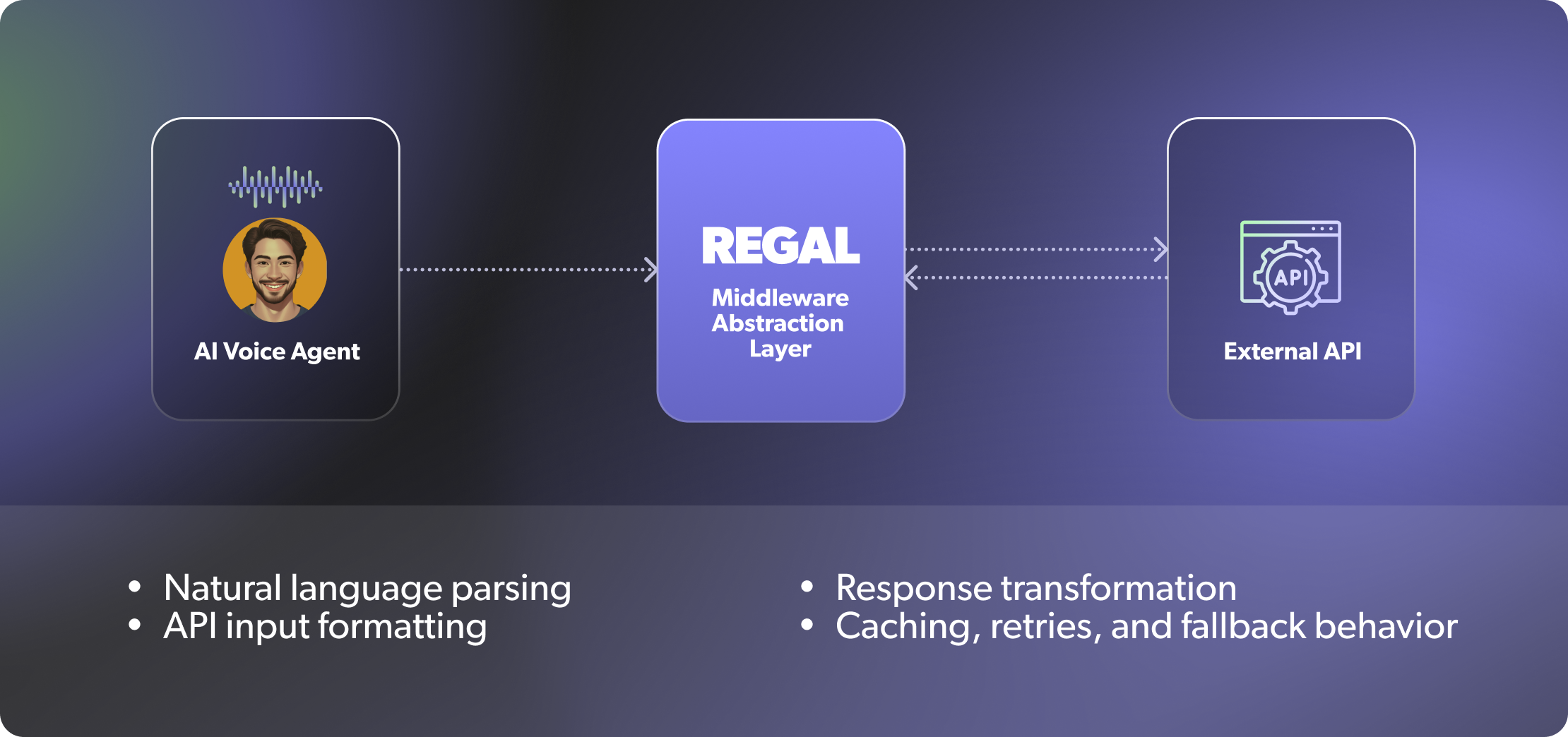

At Regal, we address this challenge by architecting a middleware abstraction layer between AI agents and customer APIs. This layer translates natural language intents into structured, API-compliant requests and batches API calls where that reduces latency. Where needed, it also distills verbose raw responses down to what is relevant for the agent and enriches them with context-aware summaries, labels, and semantic metadata tailored for conversational interpretation. This lets legacy APIs remain intact while giving AI Voice Agents a clean, usable interface.

Here’s what we’ve learned from building this translation layer to retrofit traditional APIs into AI agent stacks, and how to design future APIs that actually make your AI agents better. To bring these lessons to life, we’ll use the example of an AI Voice Agent that helps customers find medical providers and book appointments with them.

Traditional APIs expect perfectly structured input. But AI Voice Agents deal with vague phrases like "next Friday afternoon" or "Dr. Sanchez" or "my usual location." An AI Voice Agent-ready API handles the normalization so the agent doesn’t have to. On the backend, use a library like Chrono or Duckling for natural language date parsing, fuzzy location matching based on the customer’s zip code, and named entity resolution to map "Dr. Sanchez" to a provider ID.

Traditional API:

    POST /appointments
    {
      "provider_id": "8932",
      "time": "2025-08-02T14:00:00",
      "location_id": "brooklyn_1"
    }

AI Agent-ready API:

    POST /appointments
    {
      "provider": "Dr. Sanchez",
      "time": "next Friday afternoon",
      "location": "nearest clinic"
    }

Response:

    {
      "resolved_time": "2025-08-01T14:00:00",
      "raw_input": "next Friday afternoon"
    }
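
The normalization step can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a substitute for Chrono or Duckling: the `PROVIDERS` directory, the default clock times in `DAY_PARTS`, and the rule that "next Friday" means the first Friday strictly after today are all assumptions made for the example.

```python
import difflib
from datetime import date, datetime, time, timedelta

# Hypothetical directory; a real service would query its own provider data.
PROVIDERS = {"Dr. Maria Sanchez": "8932", "Dr. Elena Martinez": "p_123"}
WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]
# Assumed default clock times for vague day parts.
DAY_PARTS = {"morning": time(9, 0), "afternoon": time(14, 0), "evening": time(18, 0)}


def resolve_time(raw: str, today: date) -> dict:
    """Resolve phrases shaped like 'next <weekday> <day part>'."""
    words = raw.lower().split()
    weekday = next((w for w in words if w in WEEKDAYS), None)
    part = next((w for w in words if w in DAY_PARTS), None)
    if weekday is None or part is None:
        raise ValueError(f"cannot resolve time phrase: {raw!r}")
    # Assumption: 'next Friday' is the first Friday strictly after today.
    days_ahead = (WEEKDAYS.index(weekday) - today.weekday() - 1) % 7 + 1
    resolved = datetime.combine(today + timedelta(days=days_ahead), DAY_PARTS[part])
    # Echo the raw input so the agent can confirm what it heard.
    return {"resolved_time": resolved.isoformat(), "raw_input": raw}


def resolve_provider(raw: str) -> dict:
    """Fuzzy-match a spoken provider name against the directory."""
    matches = difflib.get_close_matches(raw, list(PROVIDERS), n=1, cutoff=0.6)
    if not matches:
        raise ValueError(f"no provider matches {raw!r}")
    return {"provider_id": PROVIDERS[matches[0]],
            "matched_name": matches[0],
            "raw_input": raw}


# Monday, July 28, 2025 is used as "today" for the example.
print(resolve_time("next Friday afternoon", date(2025, 7, 28)))
# {'resolved_time': '2025-08-01T14:00:00', 'raw_input': 'next Friday afternoon'}
```

Returning the matched name alongside the ID lets the agent read the match back to the caller before booking.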

AI agents reason better when your API gives them full context – not just what a value is, but what it means and how it should be used in conversation. LLMs don’t operate on raw IDs or numeric codes alone. Consider how many times any given digit or letter already appears in the model’s context. Even if the ID is a UUID, it’s unreasonable to expect the LLM to parse identifiers and match them to their meanings while staying on task. LLMs make decisions based on relationships, semantics, and intent. Providing clear labels and explanatory metadata allows agents to understand, summarize, and respond accurately without guessing or hallucinating.

Traditional API:

    {
      "status": "C",
      "priority": 2
    }

AI Agent-ready API:

    {
      "status": {
        "code": "C",
        "label": "Cancelled",
        "explanation": "This appointment was cancelled by the provider."
      },
      "priority": {
        "level": 2,
        "label": "Urgent",
        "description": "Requires attention within 24 hours."
      }
    }
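
A translation layer can add these labels without touching the legacy API. The sketch below assumes hypothetical lookup tables (`STATUS_LABELS`, `PRIORITY_LABELS`) that contain only the two codes shown in this example; a real deployment would load the full code lists from wherever they are documented.

```python
# Hypothetical lookup tables holding only the codes used in this example.
STATUS_LABELS = {
    "C": ("Cancelled", "This appointment was cancelled by the provider."),
}
PRIORITY_LABELS = {
    2: ("Urgent", "Requires attention within 24 hours."),
}
# Fallback so an unmapped code is surfaced explicitly rather than guessed at.
UNKNOWN = ("Unknown", "No description is available for this code.")


def enrich(record: dict) -> dict:
    """Wrap raw codes with a label and explanation the agent can speak."""
    status_label, status_text = STATUS_LABELS.get(record["status"], UNKNOWN)
    priority_label, priority_text = PRIORITY_LABELS.get(record["priority"], UNKNOWN)
    return {
        "status": {
            "code": record["status"],
            "label": status_label,
            "explanation": status_text,
        },
        "priority": {
            "level": record["priority"],
            "label": priority_label,
            "description": priority_text,
        },
    }


enriched = enrich({"status": "C", "priority": 2})
print(enriched["status"]["label"], "/", enriched["priority"]["label"])
# Cancelled / Urgent
```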

Traditional APIs often return everything a UI might need: long doctor bios, image URLs, full calendars, and exhaustive lists of accepted insurance plans. This makes sense for frontends that humans can scroll and read on their own time, but not in a live AI Voice Agent conversation, where agents operate within token limits and care about relevance and latency. Succinct API responses reduce context overload, avoid hallucinations, and help the agent stay focused.

Traditional API:

    GET /therapy-providers?specialty=anxiety
    {
      "providers": [
        {
          "id": "p_123",
          "name": "Dr. Elena Martinez",
          "bio": "Elena Martinez, PhD, is a licensed clinical psychologist with over 20 years of experience in CBT, DBT, and trauma-informed care. She earned her doctorate at Stanford University and completed her residency at Mount Sinai. Elena specializes in anxiety, panic disorders, and adolescent therapy...",
          "short_bio": "20+ years in CBT, specializes in anxiety and trauma.",
          "specialties": ["anxiety", "trauma", "adolescents"],
          "insurance_accepted": [
            { "name": "Aetna", "plan_codes": [...] },
            { "name": "Blue Cross", "plan_codes": [...] },
            { "name": "UnitedHealthcare", "plan_codes": [...] },
            { "name": "Cigna", "plan_codes": [...] }
          ],
          "availability": [
            { "date": "2025-07-28", "slots": ["09:00", "14:00", "16:30"] },
            { "date": "2025-07-29", "slots": ["11:00", "13:30", "17:00"] },
            // ... more days ...
          ],
          "ratings": 4.9,
          "location": "Brooklyn, NY",
          "video_sessions": true,
          "languages_spoken": ["English", "Spanish"],
          "internal_tags": ["tier_1", "promoted"]
        },
        ...
      ]
    }

AI Agent-ready API:

    GET /therapy-providers/summary?location=Brooklyn&specialty=anxiety&insurance=Cigna&type=virtual
    {
      "providers": [
        {
          "name": "Dr. Elena Martinez",
          "gender": "Female",
          "title": "Licensed Clinical Psychologist",
          "summary": "20+ years in CBT, DBT, and trauma-informed care. Specializes in anxiety, panic disorders, and adolescent therapy.",
          "ratings": 4.9,
          "accepts_customer_insurance": true,
          "next_available_slot": "Monday - July 28th, 2025, at 2PM Eastern."
        }
      ]
    }
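
When the verbose endpoint cannot be changed, a middleware function can do the trimming. The following is a rough sketch, assuming the verbose record has the shape shown in the traditional response; `summarize_provider` is a hypothetical helper, and slot times are treated as clinic-local with no timezone handling.

```python
from datetime import datetime


def summarize_provider(provider: dict, customer_insurance: str) -> dict:
    """Reduce a verbose provider record to what the agent needs to say aloud."""
    accepted = {plan["name"] for plan in provider.get("insurance_accepted", [])}
    # Flatten every (date, slot) pair into datetimes and keep the earliest one.
    slots = [
        datetime.fromisoformat(f"{day['date']}T{slot}")
        for day in provider.get("availability", [])
        for slot in day["slots"]
    ]
    next_slot = min(slots) if slots else None
    return {
        "name": provider["name"],
        "summary": provider.get("short_bio", ""),
        "ratings": provider.get("ratings"),
        # One boolean replaces the full list of plans and plan codes.
        "accepts_customer_insurance": customer_insurance in accepted,
        # A speakable string instead of a multi-day calendar.
        "next_available_slot": (
            next_slot.strftime("%A, %B %d, %Y at %I:%M %p") if next_slot else None
        ),
    }


verbose = {
    "id": "p_123",
    "name": "Dr. Elena Martinez",
    "short_bio": "20+ years in CBT, specializes in anxiety and trauma.",
    "insurance_accepted": [{"name": "Aetna"}, {"name": "Cigna"}],
    "availability": [
        {"date": "2025-07-29", "slots": ["11:00", "13:30"]},
        {"date": "2025-07-28", "slots": ["14:00", "09:00"]},
    ],
    "ratings": 4.9,
    "internal_tags": ["tier_1", "promoted"],
}
slim = summarize_provider(verbose, customer_insurance="Cigna")
print(slim)
```

Internal fields such as `id` and `internal_tags` never reach the agent, so they cannot leak into the conversation.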

Descriptive error messages with hints about what to do next help agents retry or redirect naturally.
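
One way to produce such errors is to catch parsing failures at the API boundary and return a structured body. This is a minimal sketch: `handle_date_param` is a hypothetical helper that accepts only ISO dates, so its hint names only that format. A service that also parses natural language could widen the hint accordingly.

```python
from datetime import date


def handle_date_param(raw: str) -> tuple[int, dict]:
    """Return (HTTP status, JSON body) for a date query parameter."""
    try:
        parsed = date.fromisoformat(raw)
    except ValueError:
        # Say what went wrong, what was received, and what to try instead.
        return 400, {
            "error": "Invalid date format",
            "hint": "Use an ISO date such as '2025-08-01'",
            "received": raw,
        }
    return 200, {"date": parsed.isoformat()}


status, body = handle_date_param("08/01/2025")
print(status, body["hint"])
# 400 Use an ISO date such as '2025-08-01'
```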

Traditional API:

400 Bad Request

AI Agent-ready API:

    {
      "error": "Invalid date format",
      "hint": "Try 'next Monday' or '2025-08-01'"
    }

AI agents work better with fewer round trips. Each additional API call introduces latency and raises the risk of failure when the agent is trying to hold a live voice conversation. Unlike traditional systems that can sequence multiple calls without user-facing consequences, agents must maintain conversational coherence and flow. Bundling relevant context into a single endpoint helps the agent reason across multiple dimensions at once, such as user status, plan type, and appointment availability, without needing to stop and fetch information mid-dialogue.

Traditional API:

Requires 4 successive calls:

    GET /customer
    GET /appointments?customer_id=...
    GET /cases?customer_id=...
    GET /plan?customer_id=...

AI Agent-ready API:

    GET /customer-summary?customer_id=abc123
    {
      "name": "Alex Smith",
      "plan_status": "active",
      "next_appointment": "Saturday, August 2nd, 2025 at 3PM Central.",
      "number_of_open_cases": 1
    }
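
A summary endpoint like this can be built as a thin aggregator that issues the four legacy calls concurrently and returns one payload. The sketch below uses `asyncio.gather`; the four `fetch_*` coroutines are hypothetical stand-ins that return canned data, and the appointments list is assumed to arrive sorted soonest first.

```python
import asyncio


# Hypothetical stand-ins for the four legacy calls; real ones would do network I/O.
async def fetch_customer(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"name": "Alex Smith"}


async def fetch_appointments(customer_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"start": "2025-08-02T15:00:00-05:00"}]


async def fetch_cases(customer_id: str) -> list:
    await asyncio.sleep(0.01)
    return [{"id": "case_1", "open": True}, {"id": "case_0", "open": False}]


async def fetch_plan(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"status": "active"}


async def customer_summary(customer_id: str) -> dict:
    """Fan out to the legacy endpoints in parallel and merge the results."""
    customer, appointments, cases, plan = await asyncio.gather(
        fetch_customer(customer_id),
        fetch_appointments(customer_id),
        fetch_cases(customer_id),
        fetch_plan(customer_id),
    )
    return {
        "name": customer["name"],
        "plan_status": plan["status"],
        # Assumes the backend returns upcoming appointments soonest first.
        "next_appointment": appointments[0]["start"] if appointments else None,
        "number_of_open_cases": sum(1 for case in cases if case["open"]),
    }


summary = asyncio.run(customer_summary("abc123"))
print(summary)
```

Because the calls run concurrently, the agent waits roughly as long as the slowest backend rather than the sum of all four.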

AI Voice Agents operate in real-time, spoken conversations – every pause or delay is felt by the user. While a traditional web UI can tolerate 500ms+ response times, AI agents cannot. A slow API response translates directly into awkward silences or interruptions. Critical endpoints should respond in under 200 milliseconds to maintain a natural, fluid exchange. And it’s not just about averages; it’s about the distribution. If your endpoint’s median latency is 200ms but the 95th percentile hits 5 seconds, half of all requests take longer than 200ms and one in twenty takes 5 seconds or more. That’s a killer for conversational flow. Aim for a p95 latency under 200ms to keep interactions smooth.
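
To make the median-versus-tail distinction concrete, here is a small sketch that computes both from a list of latency samples. The 20 sample values are invented for illustration, and `percentile` uses the simple nearest-rank definition. Monitoring tools may interpolate differently.

```python
import statistics


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies (ms) for 20 calls: most are fast, two are very slow.
latencies_ms = [120, 130, 140, 150, 160, 170, 180, 190, 200, 200,
                200, 210, 220, 230, 240, 250, 260, 270, 5000, 5200]

p50 = statistics.median(latencies_ms)
p95 = percentile(latencies_ms, 95)
meets_target = p95 <= 200

print(p50, p95, meets_target)
# 200.0 5000 False
```

The median looks healthy here, yet the endpoint misses a 200ms p95 target by a wide margin, which is why the tail is the number to track.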

Providing examples in your API docs or Postman collections helps with prompting, grounding, and debugging. When developers can see how natural-language inputs map to structured payloads, and what responses are expected, they can craft more precise prompts and diagnose issues faster. It also helps align cross-functional teams.

AI Agent-ready API Docs:
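
As a hedged illustration (the field names and the example entry are hypothetical, not taken from any real API reference), a docs entry can pair a caller utterance with the request it should produce and the response to expect. The sketch below also renders such entries as plain text that could be pasted into a prompt or a Postman description.

```python
import json
from dataclasses import dataclass


@dataclass
class DocExample:
    """One worked example: what the caller says, what is sent, what comes back."""
    utterance: str
    request: dict
    response: dict


# Hypothetical entry for a POST /appointments endpoint.
EXAMPLES = [
    DocExample(
        utterance="Can I see Dr. Sanchez on August 1st at 2pm?",
        request={"provider": "Dr. Sanchez", "time": "August 1st at 2pm"},
        response={"resolved_time": "2025-08-01T14:00:00",
                  "raw_input": "August 1st at 2pm"},
    ),
]


def render_examples(endpoint: str, examples: list) -> str:
    """Format examples as text for docs pages or few-shot prompts."""
    blocks = []
    for ex in examples:
        blocks.append(
            f"Caller says: {ex.utterance}\n"
            f"Request: {endpoint} {json.dumps(ex.request)}\n"
            f"Response: {json.dumps(ex.response)}"
        )
    return "\n\n".join(blocks)


rendered = render_examples("POST /appointments", EXAMPLES)
print(rendered)
```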

Building successful AI Voice Agents requires product, engineering, contact center teams and AI agent builders to all be on the same page. Mapping APIs to the call flow may be time-consuming, but it’s essential for creating robust and reliable agent behaviors.

MCP (Model Context Protocol) is an open standard that defines how AI agents discover and interact with external tools, APIs, and data sources in a consistent, structured way. Think of it as a universal adapter: it tells agents what tools exist, how to use them, and what data or actions each one supports.

As customers build more AI agents, managing tool access and updates becomes a challenge. MCP helps by centralizing all tool schemas and descriptions on a single server. Instead of updating each agent individually when an API changes, you update your MCP server, and all connected agents automatically benefit.

That said, MCP doesn’t replace APIs – it relies on them. Your APIs still do the heavy lifting: business logic, validation, data access, and operations. MCP just standardizes how agents discover and access those APIs.
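
For a sense of what an agent sees, an MCP tool listing describes each tool with a name, a description, and a JSON Schema for its arguments under `inputSchema`. The descriptor below is a hypothetical example written as a plain Python dict, and `missing_arguments` is an illustrative helper, not part of any MCP SDK.

```python
# Hypothetical tool descriptor in the shape used by MCP tool listings.
BOOK_APPOINTMENT_TOOL = {
    "name": "book_appointment",
    "description": "Book an appointment with a provider. Accepts natural "
                   "language for provider, time, and location.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "provider": {"type": "string", "description": "e.g. 'Dr. Sanchez'"},
            "time": {"type": "string", "description": "e.g. 'next Friday afternoon'"},
            "location": {"type": "string", "description": "e.g. 'nearest clinic'"},
        },
        "required": ["provider", "time"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """List required arguments the agent has not collected from the caller yet."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(missing_arguments(BOOK_APPOINTMENT_TOOL, {"provider": "Dr. Sanchez"}))
# ['time']
```

The descriptor only advertises the tool. The call itself still lands on the underlying API, which does the validation and booking.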

The reality today is that most APIs are not designed for AI agents, and it will take years for engineering teams to retrofit them accordingly. That’s one of the reasons why contact center teams partner with Regal to accelerate their AI agent adoption curve. Regal's "Build With" approach doesn't just deliver the tools to build AI agents, but also the expertise. Our AI Forward Deployed Engineers work side-by-side with your team – deeply understanding your business, goals, workflows, and current systems, and providing the bridge to AI agents that don’t just get deployed, but actually wow your customers and achieve business results.
