September 2023 Releases

As AI voice agents grow in capability and complexity, so does the challenge of managing what they know, how they say it, and when they say it.

The three primary methods for context engineering a Regal AI Voice agent are the prompt, Knowledge Bases (retrieval-augmented generation, or RAG), and custom actions.

Each has distinct advantages, and misusing any of them can lead to performance degradation, hallucinations, or bloated prompts that are hard to maintain.

We'll walk through how to decide what to keep in the prompt and what to shift to a Regal Knowledge Base or custom action, with examples and technical considerations.

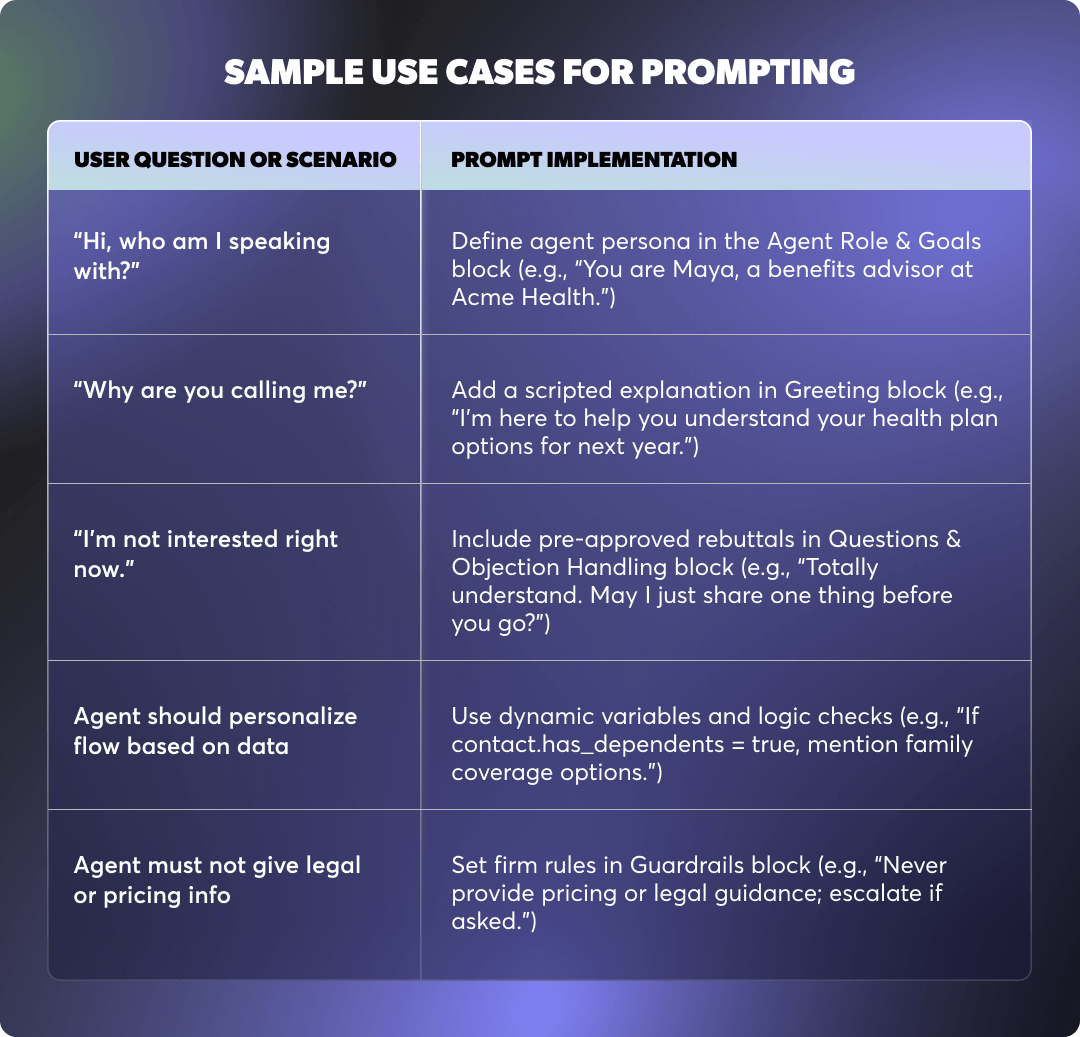

The prompt defines core agent behavior, tone, logic, and task flow.

It's evaluated at the start of the conversation and guides all interactions. Prompt content should be compact, essential, and mostly static.

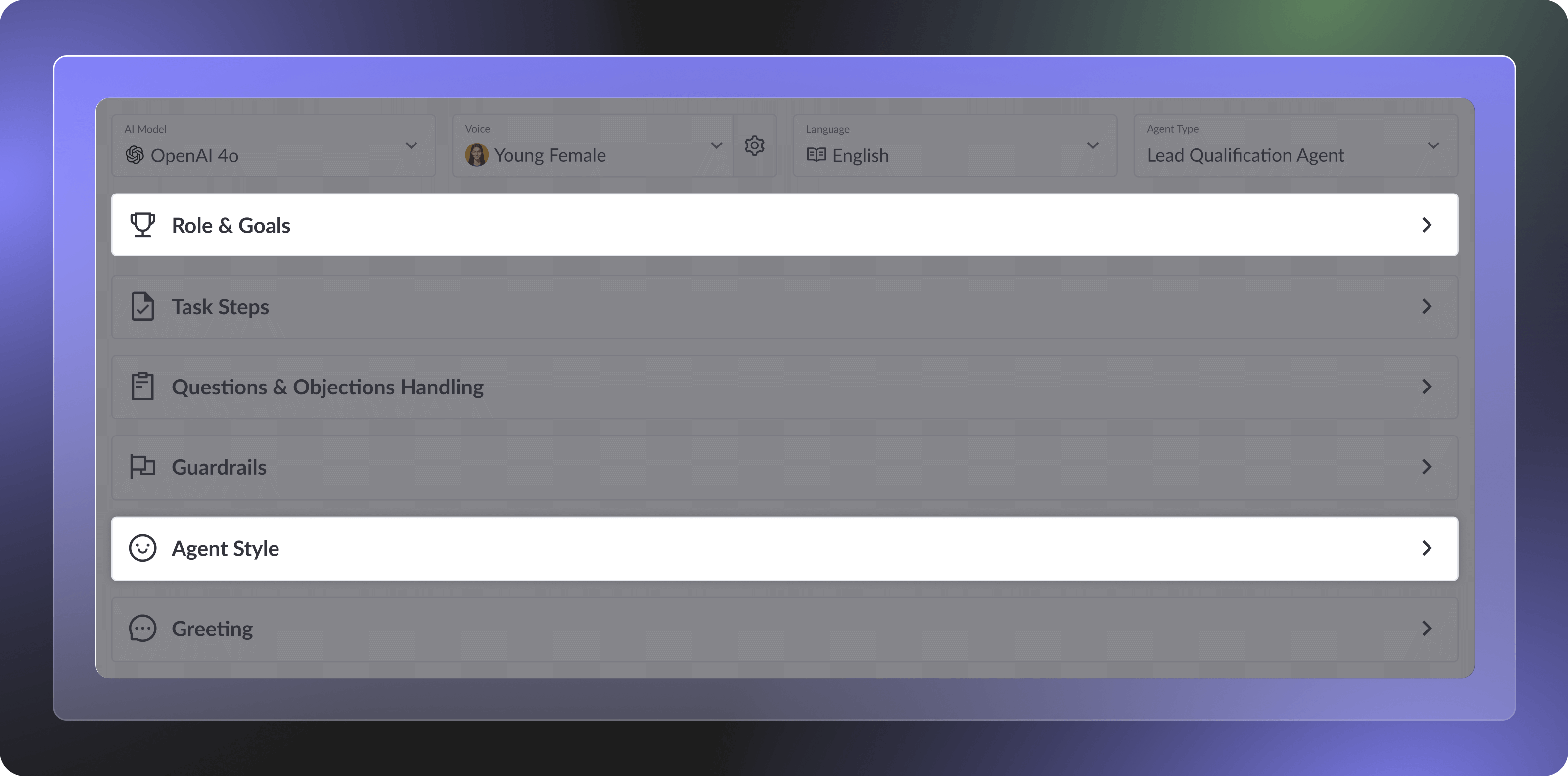

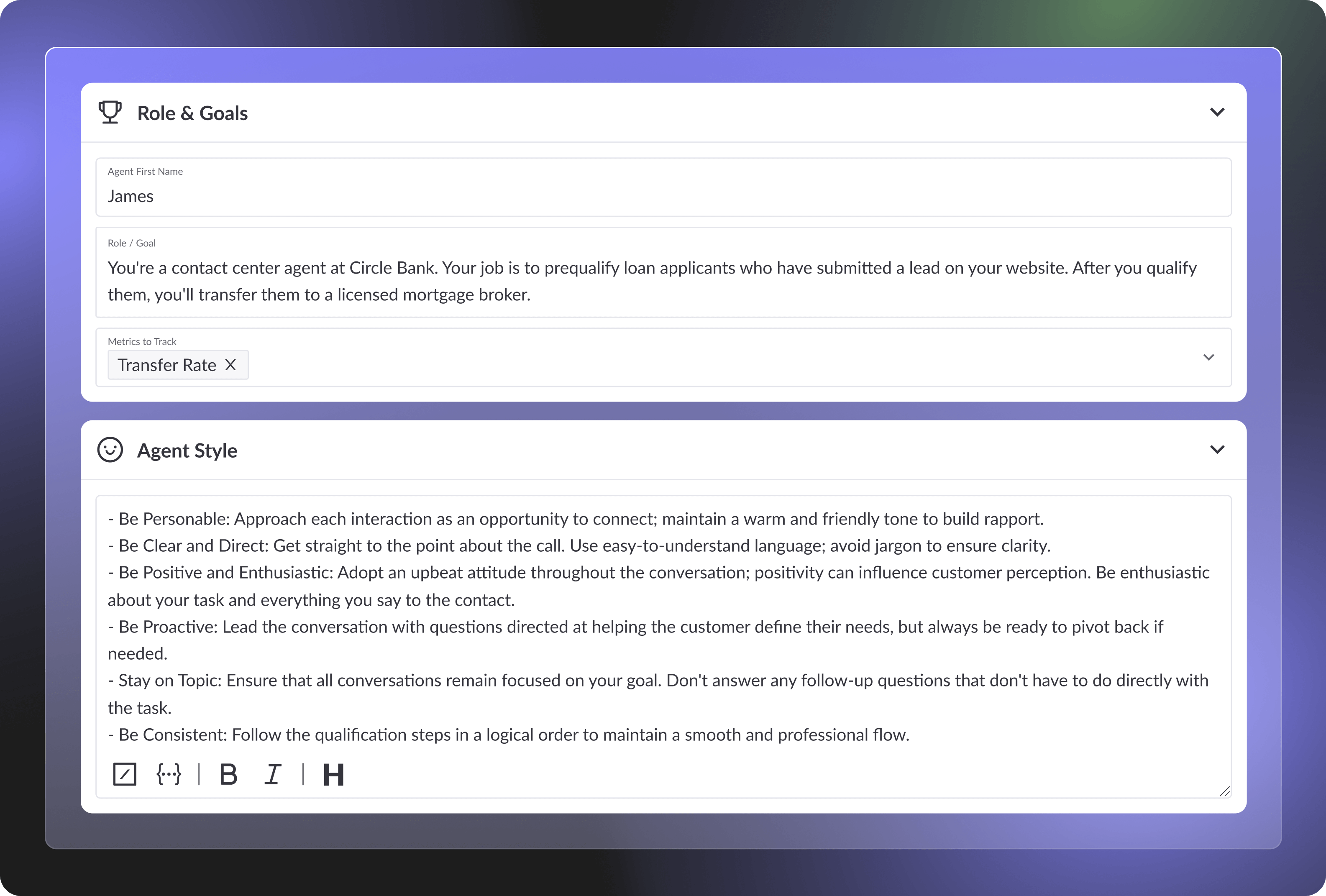

You can define the agent's role, goals, and style in the “Role & Goals” and “Agent Style” dropdowns in Regal.

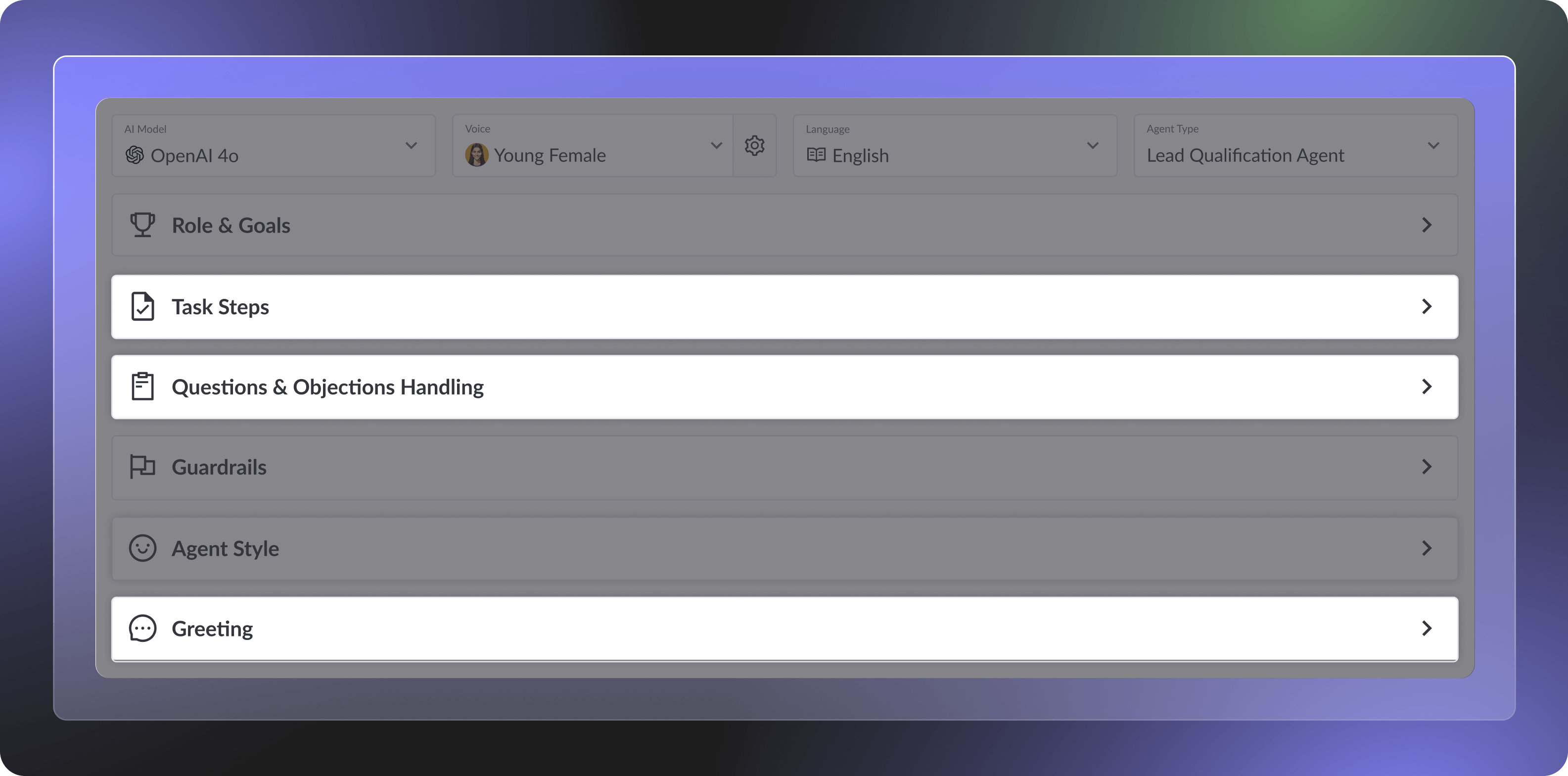

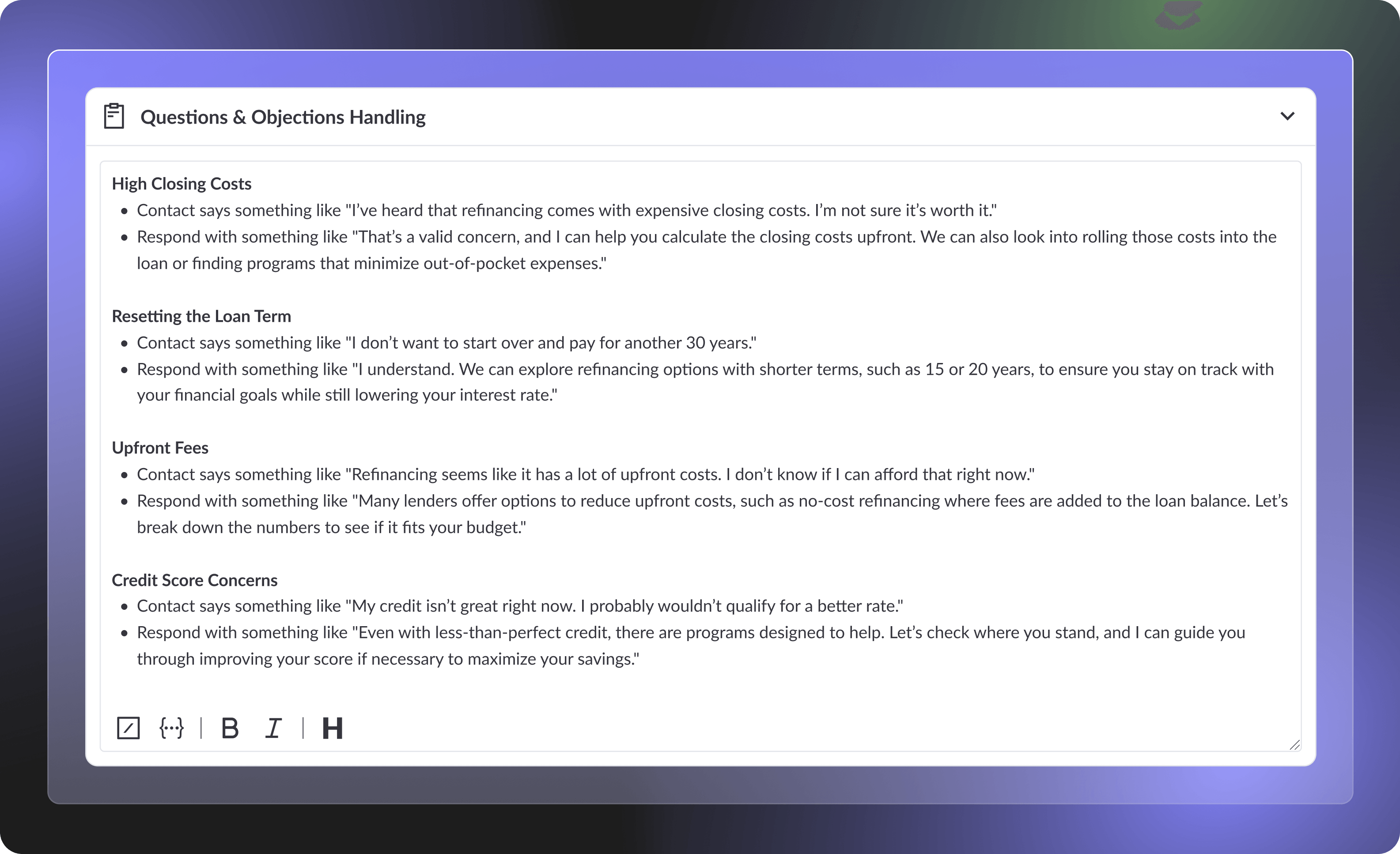

To define the task flow, objection handling, and opening of the call, use the “Task Steps,” “Questions & Objections Handling,” and “Greeting” dropdowns.


Use the prompt for responses to common objections that require fast, consistent phrasing. This is ideal when answers are short and stable over time.

The prompt is also where you define how and when to invoke custom actions (e.g., a pricing lookup or a scheduling call).


The prompt is the deterministic core of your agent. It governs tone, behavior, sequencing, decision-making, and when to invoke structured logic.

Because it’s always loaded and evaluated across the full conversation, the prompt is the right place for anything that needs to consistently shape how the agent acts. In contrast, RAG content is only referenced conditionally (when retrieval is triggered by context).
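To make the "always loaded vs. conditionally referenced" distinction concrete, here is a minimal Python sketch of how a per-turn context might be assembled. It assumes a simplified model in which the prompt is included on every turn and a knowledge entry is included only when a trigger word appears in what the contact said. `TurnContext`, `build_context`, and the trigger-word mechanism are hypothetical illustrations, not Regal's API; real retrieval is driven by conversational context rather than a fixed word list.

```python
import re
from dataclasses import dataclass, field


@dataclass
class TurnContext:
    """Hypothetical bundle of context handed to the model on one turn."""
    prompt: str  # always present: behavior, tone, flow, guardrails
    retrieved: list[str] = field(default_factory=list)  # present only when retrieval fires


def build_context(prompt: str, utterance: str,
                  knowledge: dict[str, str],
                  triggers: dict[str, set[str]]) -> TurnContext:
    """Always include the prompt; include a knowledge entry only if the
    contact's words overlap that entry's (hypothetical) trigger words."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    hits = [text for key, text in knowledge.items()
            if words & triggers.get(key, set())]
    return TurnContext(prompt=prompt, retrieved=hits)


PROMPT = "You are a friendly scheduling assistant. Follow the task steps in order."
KNOWLEDGE = {"refunds": "(refund policy text)", "shipping": "(shipping policy text)"}
TRIGGERS = {"refunds": {"refund", "money"}, "shipping": {"ship", "delivery"}}

with_hit = build_context(PROMPT, "Can I get a refund?", KNOWLEDGE, TRIGGERS)
without_hit = build_context(PROMPT, "Hi, I'd like to book a visit.", KNOWLEDGE, TRIGGERS)
print(with_hit.retrieved)     # ['(refund policy text)']
print(without_hit.retrieved)  # []
```

In both turns the prompt is present; only the first turn pulls in reference material.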

That makes the prompt your agent’s brain, not its encyclopedia. It’s where you define how the agent thinks and behaves, so it should focus on control: logic, flow, and guardrails.

It's not for storing large amounts of reference material. Including too much static or reference material bloats the context window, risks crowding out critical instructions, and can degrade reliability.

A lean, well-structured prompt keeps your agent fast, predictable, and easier to maintain.
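One way to keep a prompt lean is to treat it as a small set of named sections and flag it when it grows past a budget. The sketch below assumes section names that mirror the Regal dropdowns mentioned above; the ordering, the `assemble_prompt` helper, and the 1,500-word budget are all hypothetical and only illustrate the idea.

```python
# Hypothetical section names mirroring the Regal dropdowns; the order and
# the word budget are illustrative assumptions, not Regal settings.
SECTION_ORDER = [
    "Role & Goals",
    "Agent Style",
    "Greeting",
    "Task Steps",
    "Questions & Objections Handling",
]


def assemble_prompt(sections: dict[str, str], max_words: int = 1500) -> tuple[str, list[str]]:
    """Join non-empty sections in a fixed order and report an issue if the
    result exceeds the (hypothetical) word budget."""
    parts = []
    for name in SECTION_ORDER:
        body = sections.get(name, "").strip()
        if body:
            parts.append(f"## {name}\n{body}")
    prompt = "\n\n".join(parts)

    issues = []
    word_count = len(prompt.split())
    if word_count > max_words:
        issues.append(
            f"Prompt is {word_count} words (budget {max_words}); "
            "consider moving reference material to a Knowledge Base."
        )
    return prompt, issues


prompt, issues = assemble_prompt({
    "Role & Goals": "You help contacts book appointments.",
    "Agent Style": "Warm, concise, and professional.",
})
print(issues)  # []
```

A budget check like this does not make a prompt good, but it surfaces the moment reference material starts creeping into the control layer.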

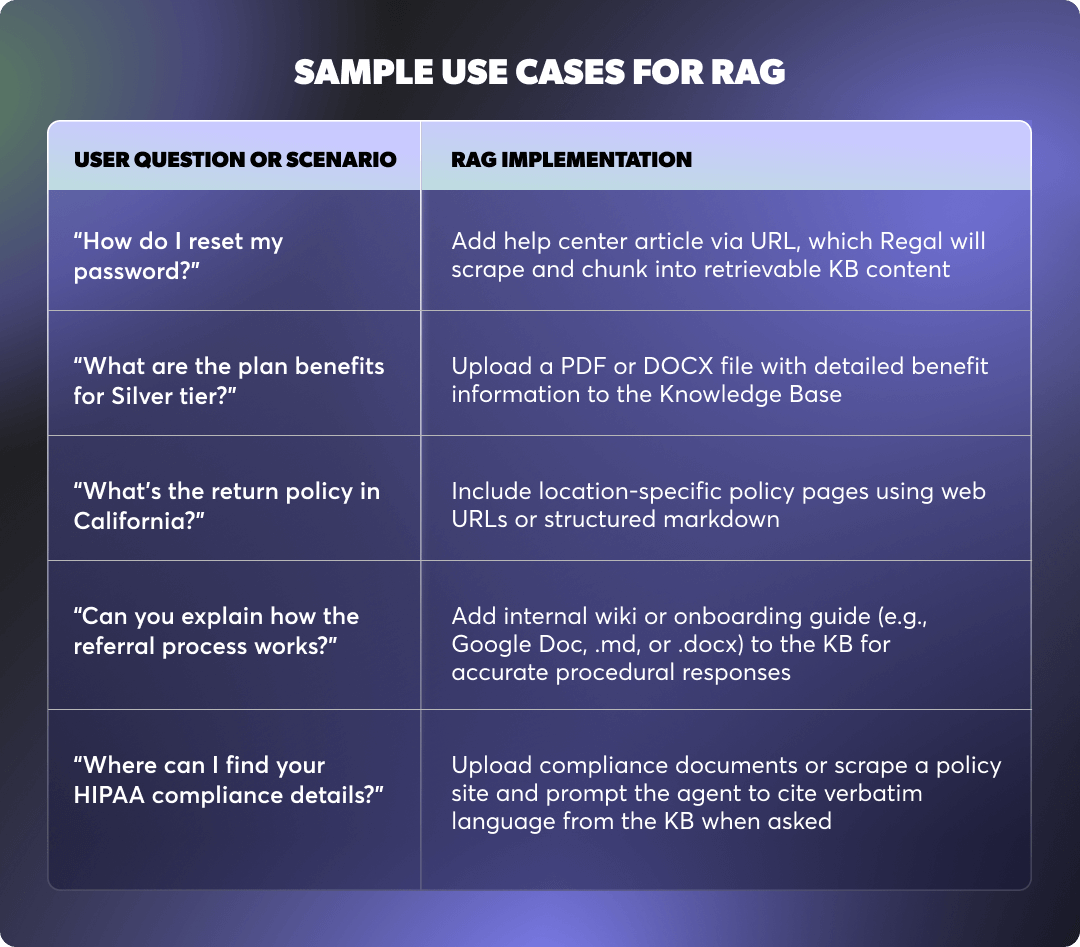

Regal’s Knowledge Bases allow you to ingest long, structured content like support documentation or policy language and access it dynamically during the conversation through RAG.

The agent retrieves the relevant information based on real-time context, keeping prompts lean and responses accurate.

See how you can create a Knowledge Base and connect it to an AI Agent.

RAG allows agents to scale their knowledge without compromising speed or behavior.

It decouples factual content from behavior logic, enabling you to update policies, support docs, and how-to material without touching the prompt.

This separation keeps the agent’s core logic stable while allowing its knowledge to evolve.

Regal’s retrieval engine automatically indexes long-form content and surfaces relevant sections based on the Knowledge Base’s configured description and real-time conversational context.
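The two-step behavior described above (decide whether a knowledge base is relevant, then surface the most relevant sections) can be sketched with a toy retriever. This is not how Regal's engine works internally: production retrieval typically uses vector embeddings, and this sketch swaps in plain word overlap with a tiny stopword list so it stays self-contained. `retrieve`, `tokens`, and the sample content are hypothetical.

```python
import re

# Tiny illustrative stopword list; real systems handle this very differently.
STOPWORDS = {"a", "an", "and", "are", "can", "do", "for", "how", "i", "is",
             "it", "my", "of", "on", "the", "to", "what", "you", "your"}


def tokens(text: str) -> set[str]:
    """Lowercase word set with stopwords removed."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOPWORDS


def retrieve(kb_description: str, chunks: list[str], context: str,
             top_k: int = 2) -> list[str]:
    """Keyword-overlap stand-in for retrieval:
    1. skip this knowledge base unless the context overlaps its description;
    2. otherwise return up to top_k chunks ranked by shared words."""
    ctx = tokens(context)
    if not ctx & tokens(kb_description):
        return []
    scored = [(len(ctx & tokens(chunk)), i, chunk) for i, chunk in enumerate(chunks)]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [chunk for _, _, chunk in ranked[:top_k]]


DESCRIPTION = "Returns and refund policy for online orders"
CHUNKS = [
    "A refund is issued to the original payment method.",
    "Returns must include the original packaging.",
    "Our offices are closed on public holidays.",
]
print(retrieve(DESCRIPTION, CHUNKS, "How do I get a refund for my order?"))
print(retrieve(DESCRIPTION, CHUNKS, "What are your office hours?"))  # []
```

The second query returns nothing because it never matches the knowledge base's description, which is one reason a clear, specific description matters.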

RAG works best for unstructured or narrative information, rather than structured data, as it cannot compute values, perform sorting, or reliably extract from things like pricing tables or state-based rules.


And because retrieval depends on context, it introduces non-determinism, meaning responses may vary slightly across otherwise similar conversations.

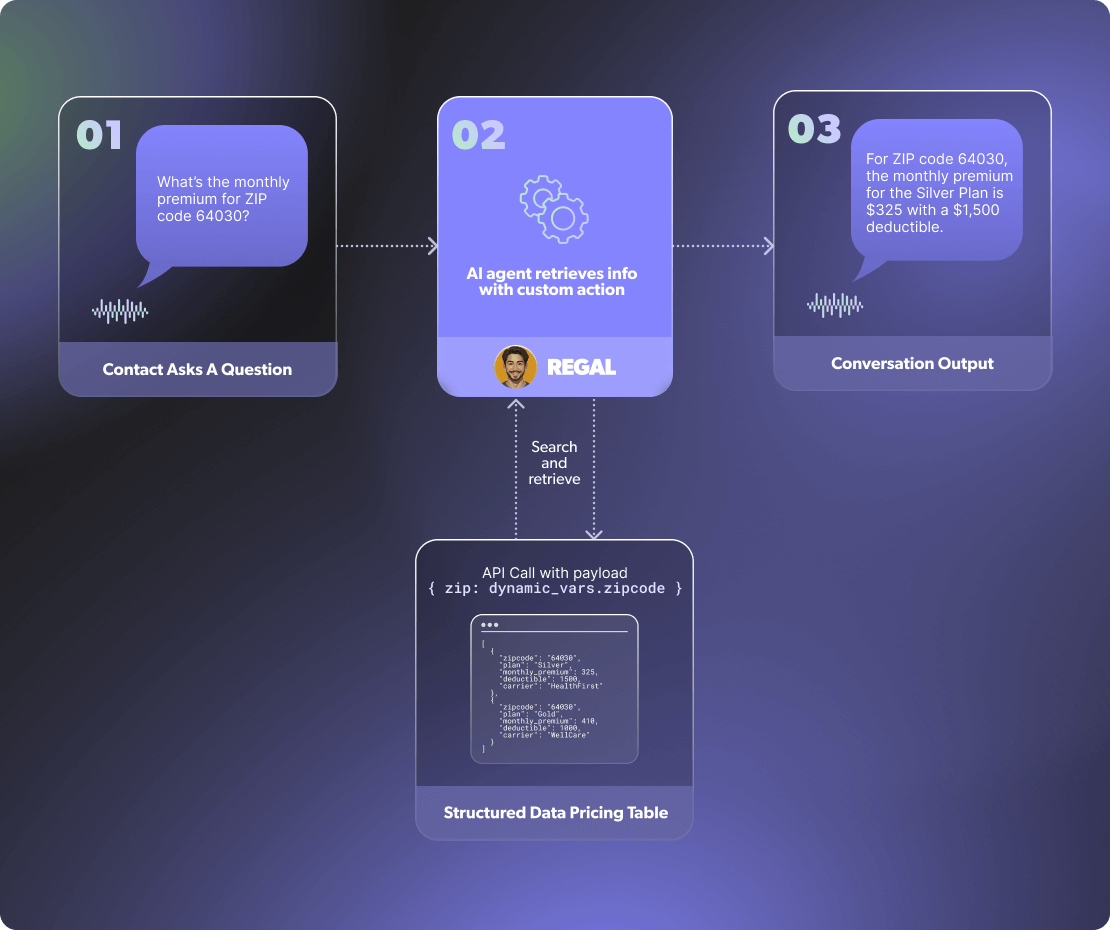

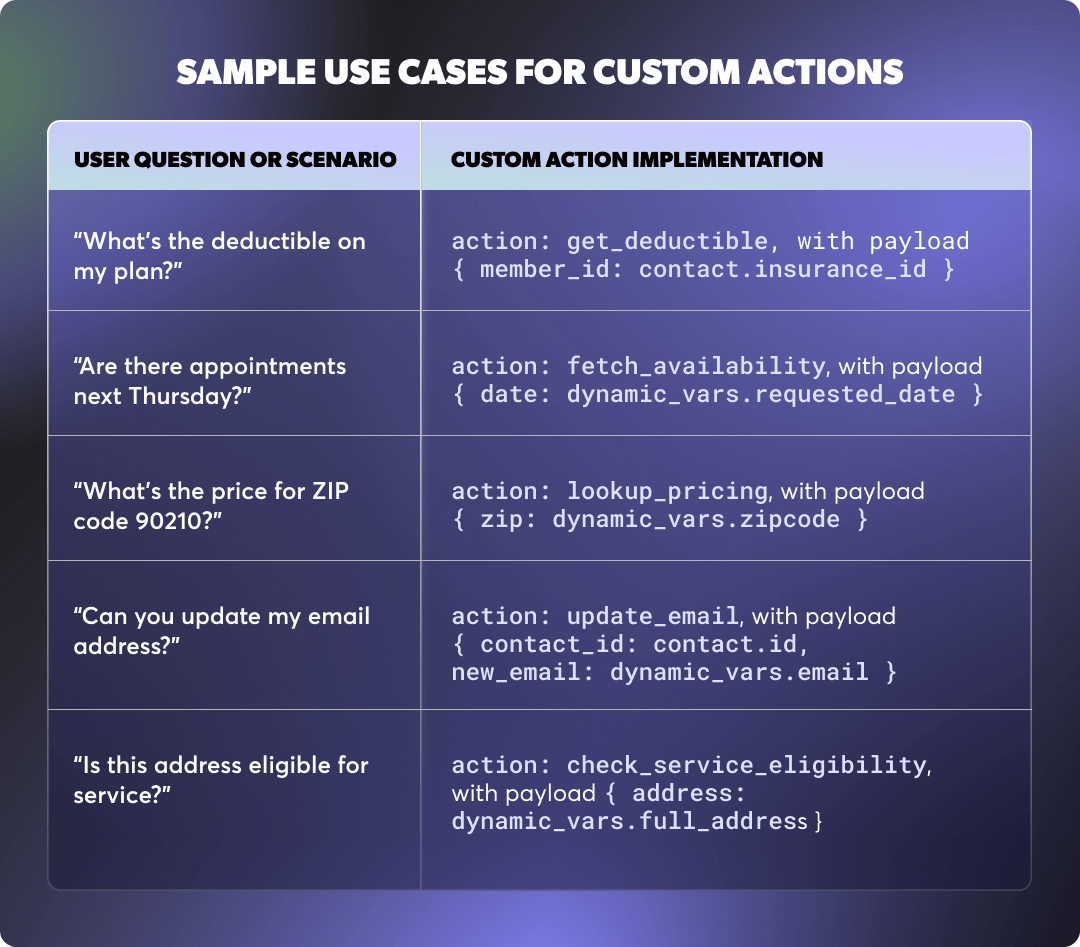

Custom Actions are how Regal AI agents integrate with APIs or structured data sources. They allow the agent to perform precise lookups, execute business logic, or fetch real-time information from external systems.

This is the best choice when the agent must query structured data, perform conditional logic, or execute real-time transactions.

While prompts are ideal for orchestration and RAG is great for unstructured reference material, custom actions are essential when the agent must deliver precise, dynamic outputs.

Only Custom Actions can fetch exact values (like account balances or appointment slots), evaluate multi-condition rules, or write back to external systems without risking ambiguity.
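Pricing tables and state-based rules, which RAG handles poorly, are exactly the kind of logic a custom action can evaluate deterministically. The sketch below is a hypothetical backend function such an action might call; the plans, prices, state restriction, and discount are made-up values, and `quote_price` is not a Regal API.

```python
from decimal import Decimal

# Hypothetical structured data behind a custom action (made-up values).
BASE_PRICE = {"basic": Decimal("20.00"), "plus": Decimal("35.00")}
UNAVAILABLE_IN = {"plus": {"NY"}}       # plan -> states where it is not offered
LOYALTY_DISCOUNT = Decimal("0.10")      # 10% off for existing customers


def quote_price(plan: str, state: str, existing_customer: bool) -> dict:
    """Evaluate several conditions in a fixed order and return an exact result."""
    if plan not in BASE_PRICE:
        return {"ok": False, "reason": f"unknown plan '{plan}'"}
    if state in UNAVAILABLE_IN.get(plan, set()):
        return {"ok": False, "reason": f"'{plan}' is not offered in {state}"}
    price = BASE_PRICE[plan]
    if existing_customer:
        # Decimal avoids floating-point rounding surprises in currency math.
        price = (price * (1 - LOYALTY_DISCOUNT)).quantize(Decimal("0.01"))
    return {"ok": True, "price": str(price)}


print(quote_price("plus", "CA", existing_customer=True))   # {'ok': True, 'price': '31.50'}
print(quote_price("plus", "NY", existing_customer=False))  # {'ok': False, 'reason': "'plus' is not offered in NY"}
```

The same inputs always produce the same answer, which is the property the agent needs when quoting a price.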

> See a detailed rundown of how to create a custom action for your AI Agent.

Custom Actions provide a deterministic, API-driven bridge between your agent and the systems it needs to interact with. They’re purpose-built for structured logic and data retrieval, tasks that cannot be reliably handled by prompts or RAG.

On a call, when a contact asks a question, the AI Agent uses a custom action to retrieve information from an external system and then uses that information to respond.
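The question → action → response flow can be sketched as follows. This is a minimal illustration under stated assumptions: `get_balance_action` and `answer_balance_question` are hypothetical names, and an in-memory dict stands in for the external system so the sketch runs on its own. In a real deployment the lookup would be the API call configured in the custom action.

```python
from typing import Callable, Optional

# Hypothetical signature for whatever client talks to the external system.
Fetcher = Callable[[str], Optional[dict]]


def get_balance_action(fetch: Fetcher, account_id: str) -> Optional[int]:
    """Hypothetical custom action: return the exact balance in cents, or None."""
    record = fetch(account_id)
    return None if record is None else record["balance_cents"]


def answer_balance_question(fetch: Fetcher, account_id: str) -> str:
    """Call the action, then phrase the reply from the exact value it returned."""
    cents = get_balance_action(fetch, account_id)
    if cents is None:
        return "I couldn't find that account. Could you confirm the account number?"
    dollars, remainder = divmod(cents, 100)  # assumes a non-negative balance
    return f"Your current balance is ${dollars:,}.{remainder:02d}."


# In-memory stand-in for the external system (made-up data).
FAKE_ACCOUNTS = {"A-100": {"balance_cents": 123456}}
print(answer_balance_question(FAKE_ACCOUNTS.get, "A-100"))
# Your current balance is $1,234.56.
```

Keeping the lookup separate from the phrasing means the number the contact hears is exactly the number the system returned.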

RAG can't perform logic or precision extraction, and prompts can't dynamically access live data.

Custom actions guarantee consistency, enable branching logic, and return exactly the values your agent needs in order to respond accurately and maintain compliance.


Context engineering an AI agent requires more than good content; it requires knowing when and how your agent should reference that content.

Prompts, knowledge bases, and custom actions each serve a different role, and using them interchangeably can lead to bloated logic and systems that are hard to manage and update.

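To build agents that scale reliably:

- Keep the prompt focused on behavior, tone, task flow, guardrails, and when to invoke custom actions.
- Move long-form, unstructured reference material (policies, support docs, how-to content) into a Knowledge Base.
- Use custom actions for structured data, exact values, multi-condition logic, and real-time transactions.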

Clear boundaries between these layers make your agents easier to test, maintain, and improve.
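As a rough rule of thumb, the decision can be written as a small function. The sketch assumes each piece of content can be described by three yes/no properties; `ContentItem`, `choose_layer`, and the precedence order (precision first, then behavior, then reference) are a hypothetical encoding of the guidance in this article, not a Regal feature.

```python
from dataclasses import dataclass


@dataclass
class ContentItem:
    name: str
    shapes_behavior: bool      # tone, flow, guardrails, when to call actions
    needs_exact_or_live: bool  # exact values, computation, real-time data, write-backs
    long_form_reference: bool  # policies, support docs, how-to narrative


def choose_layer(item: ContentItem) -> str:
    """Route content to a layer; precision needs take priority."""
    if item.needs_exact_or_live:
        return "custom action"
    if item.shapes_behavior:
        return "prompt"
    if item.long_form_reference:
        return "knowledge base"
    return "prompt"  # short, stable content defaults to the prompt


for item in [
    ContentItem("objection: too expensive", True, False, False),
    ContentItem("available appointment slots", False, True, False),
    ContentItem("return policy", False, False, True),
]:
    print(f"{item.name} -> {choose_layer(item)}")
```

Real content is rarely this clean, but asking these three questions of each item is a quick way to spot material that belongs in a different layer.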

Ready to see Regal in action?

Book a personalized demo.