September 2023 Releases

Conversational AI agents are one of the fastest-growing applications of LLMs, powering enterprise CX interactions across voice, SMS, chat, and email. As these agents become more capable, handling multi-turn conversations and complex actions, the potential business impact continues to grow.

But so does the risk associated with every deployment. Manual tests can no longer cover the full breadth of scenarios or guarantee consistency at scale when responses are non-deterministic. Any poorly handled case that slips through testing can quickly multiply into large-scale performance issues.

Managing this risk requires a new standard of testing: one that is robust, varied, and rooted in best practices designed specifically for AI agents.

In this guide, we’ll break down how to test AI agents in Regal.

You’ll learn what to test (across agent logic, voice, and telephony), when to run tests, how to configure simulation-based test suites, and how Regal helps you automate testing to scale and iterate faster from development to production.

Not all AI Voice Agents are built the same. Testing a conversational voice agent is fundamentally different from testing an inbox automation or a one-off form-filler, because the agent is more deeply rooted in your telephony and tech stack.

With a complex multi-turn voice agent, testing needs to incorporate three layers: core logic, voice, and telephony.

Each of these layers introduces its own challenges, and each must be right for your AI agents to perform consistently from day one.

The core of any AI Agent is its workflow logic, context, and actions—how the agent understands and retrieves context, handles objections, and decides whether to call a function or escalate.

In Regal, this is maintained via the agent’s prompt (or prompts, for multi-state agents), which covers task and action instructions, objection handling, and style, along with the agent’s function calls.

This is where most of your testing iteration should focus. An agent that doesn’t hallucinate, skip steps, or misinterpret objections and questions feels truly human, and is more pleasant and helpful for every caller.

Why this matters: Logic drives outcomes. Testing core logic is how you make sure you’re capturing all of the value from a conversation. If the AI gets logic and decisioning wrong, your voice settings and telephony either won’t come into play or simply won’t matter.

Example: A lead says, “My monthly payments are killing me,” but the agent doesn’t recognize it as a refinancing opportunity because its prompt only covers explicit phrasing like “I want to refinance.” Testing core logic lets you catch and address edge cases like this before launch, expanding coverage so more conversations lead to qualified outcomes from the start.

On top of logic is the voice layer. This includes voice selections, TTS (text-to-speech) settings, and support for different accents or languages.

Unlike text channels, voice must contend with latency, recognition errors, and background noise. Even pronunciation can be tricky—brand names or uncommon words often come out wrong unless explicitly prompted.

In Regal, you control voice selection, speed, responsiveness, interruption sensitivity, and pronunciation through builder settings and prompt edits.

By using the Test Audio function, you can catch potential voice or phrasing regressions early, so your agent consistently sounds professional and easy to understand.

Why this matters: Voice is how your logic and branded messaging are delivered. Testing ensures your agent sounds fluent and responsive, reinforcing trust for your specific customer base and preventing friction that might cause a caller to hang up.

Example: A healthcare intake agent for eldercare may need to speak more slowly and at a higher volume, with a calm, empathetic tone, reduced responsiveness, and no artificial background noise. These voice settings not only set the right tone for elderly patients, who may be hard of hearing or need more time to respond, but also have a functional impact by improving clarity and driving better call outcomes.
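To make voice changes reviewable, it can help to keep each agent’s voice settings as a versioned profile and diff it before and after an edit. The sketch below is a minimal illustration. It assumes hypothetical field names (`speed`, `volume`, `responsiveness`, `interruption_sensitivity`, `background_noise`) that mirror the settings described above. These are not Regal’s actual configuration schema, and the values are placeholders, not recommendations.

```python
from dataclasses import asdict, dataclass

# Hypothetical voice profile. Field names and numeric scales are illustrative
# assumptions that mirror the builder settings described in the text; they are
# not Regal's actual configuration schema.
@dataclass(frozen=True)
class VoiceProfile:
    voice: str
    speed: float                     # 1.0 = normal speaking rate
    volume: float                    # 1.0 = normal volume
    responsiveness: float            # 0-1, how quickly the agent replies
    interruption_sensitivity: float  # 0-1, how easily caller speech interrupts
    background_noise: bool           # whether artificial ambient noise is added
    pronunciations: tuple = ()       # (word, phonetic spelling) pairs


def diff_profiles(before: VoiceProfile, after: VoiceProfile) -> dict:
    """Return {setting: (old_value, new_value)} for every setting that changed."""
    old, new = asdict(before), asdict(after)
    return {key: (old[key], new[key]) for key in old if old[key] != new[key]}


# Placeholder values for a general-purpose default and an eldercare intake agent.
DEFAULT = VoiceProfile(
    voice="standard",
    speed=1.0,
    volume=1.0,
    responsiveness=0.8,
    interruption_sensitivity=0.7,
    background_noise=True,
)

ELDERCARE_INTAKE = VoiceProfile(
    voice="calm-empathetic",
    speed=0.85,                    # slower speech
    volume=1.2,                    # louder for callers who may be hard of hearing
    responsiveness=0.5,            # give callers more time to respond
    interruption_sensitivity=0.4,
    background_noise=False,        # no artificial background noise
)

if __name__ == "__main__":
    for setting, (old, new) in diff_profiles(DEFAULT, ELDERCARE_INTAKE).items():
        print(f"{setting}: {old} -> {new}")
```

Reviewing a diff like this alongside a Test Audio check helps keep voice changes deliberate rather than accidental.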


Finally, there’s the telephony layer—something unique to voice agents.

Voice agents are part of a broader contact center infrastructure that extends beyond the agent itself. Testing this layer means checking SIP integrations for reliable connectivity with external systems, validating how calls are routed and transferred, and confirming that call details and other brand or customer context are passed between systems.

Calls don’t happen in isolation: an AI agent often needs to transfer to a human when issues get complex. If the transfer isn’t tested, customers may get dropped, or human agents may receive a call with no context. Proper testing ensures hand-offs are seamless, with conversation transcripts and caller details passed through, so the customer experience doesn’t break down at the most critical moment.

Why this matters: Telephony is the connective tissue between your AI agent and the rest of your CX stack. Even if logic and voice are flawless, a broken call transfer or missing context can erase trust instantly. Testing telephony flows ensures continuity, so customers don’t feel like they’re starting over when handed off, and human agents are empowered with the right information to resolve the issue quickly.

Example: A customer calls about a loan payoff, but the AI agent recognizes they need to speak with a human due to policy constraints. If the transfer drops the call or fails to pass along the caller’s account number and transcript, the customer will have to repeat themselves, increasing frustration and abandonment risk. Testing telephony flows ensures the human agent receives the transcript and account context automatically, creating a seamless, professional experience that builds trust instead of eroding it.
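A transfer test can assert on the hand-off payload itself, not just on whether the call connected. The sketch below assumes a hypothetical payload shape with `caller_phone`, `account_number`, and `transcript` fields. The real field names depend on your telephony and CRM integration.

```python
# Hypothetical required fields for a human hand-off. Real payload shapes depend
# on your telephony and CRM integration; adjust the names to match yours.
REQUIRED_HANDOFF_FIELDS = ("caller_phone", "account_number", "transcript")


def missing_handoff_context(payload: dict, required=REQUIRED_HANDOFF_FIELDS) -> list:
    """Return the required fields that are absent or empty in a transfer payload."""
    return [name for name in required if not payload.get(name)]


if __name__ == "__main__":
    # Example payload captured from a test transfer: the account number was lost.
    payload = {
        "caller_phone": "+15555550123",
        "account_number": "",
        "transcript": [
            {"speaker": "customer", "text": "I'd like to pay off my loan."},
            {"speaker": "agent", "text": "Let me connect you with a specialist."},
        ],
    }
    missing = missing_handoff_context(payload)
    print("Hand-off OK" if not missing else f"Missing context: {missing}")
```

Treating an empty value the same as a missing one catches transfers where the field exists but the context never made it across.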

Logic, voice, and telephony each carry unique risks, and testing must cover all three. By understanding and testing each layer, businesses can ensure that their voice AI agent isn’t just technically functional, but reliable in real-world, high-stakes scenarios.

Testing a conversational AI agent isn’t one-size-fits-all. The right approach depends on whether you’re still building the agent, or ready to deploy it at scale.

Manual testing is indispensable in the early development phase. Running one-off test calls or text conversations helps teams prototype flows, debug responses, and capture subjective nuances like tone, voice quality, and pronunciation. Regal’s manual testing interface lets you follow every step of a text or voice interaction, including action invocations and their payloads.


But manual testing is slow, inconsistent, and doesn’t scale. As you tweak your prompt and RAG setup, relying solely on manual testing means every new version requires retesting all flows from scratch. Even then, gaps are inevitable: manual tests can’t capture the diversity of your customer personas or the non-deterministic nature of AI responses, so they can’t give you confidence that the AI agent will behave predictably and consistently at scale.

That’s where automated testing comes in. Automated tests are the only way to confidently iterate on complex, multi-turn agents without exposing customers to risk. Yet many teams still skip them—assuming they’re too complex or costly. In reality, skipping automation slows iteration, magnifies errors in production, and erodes ROI.

This is why Regal offers both: a single-test feature in the builder for quick manual checks, and a simulation suite for running automated tests at scale. Manual tests help you build; automated tests help you scale.

The rule of thumb: run automated tests before every deployment or model update. This ensures regressions are caught early, release cycles stay short, and customer experience remains consistent. For contact centers where revenue and trust hinge on reliable interactions, skipping this step can be costly.
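One way to enforce this rule is a release gate that compares each scenario’s pass rate against a required threshold and blocks the deployment if any scenario falls short. This is a minimal sketch. The scenario names, the thresholds, and the idea of feeding it pass rates exported from your test runs are assumptions for illustration, not a built-in Regal feature.

```python
# Minimal release-gate sketch. Scenario names and thresholds are hypothetical;
# pass rates are assumed to come from your automated test runs.

def release_blockers(pass_rates: dict, thresholds: dict) -> list:
    """List every required scenario that did not run or fell below its threshold."""
    blockers = []
    for scenario, required in thresholds.items():
        rate = pass_rates.get(scenario)
        if rate is None:
            blockers.append(f"{scenario}: not run")
        elif rate < required:
            blockers.append(f"{scenario}: {rate:.0%} < {required:.0%}")
    return blockers


if __name__ == "__main__":
    thresholds = {
        "refinance_multi_turn": 0.9,
        "transfer_on_refinance_intent": 1.0,  # must trigger every time
        "implicit_rate_inquiry": 1.0,
    }
    pass_rates = {
        "refinance_multi_turn": 0.95,
        "transfer_on_refinance_intent": 0.6,
    }
    blockers = release_blockers(pass_rates, thresholds)
    print("Deploy" if not blockers else "Blocked:\n" + "\n".join(blockers))
```

In a CI pipeline, a non-empty list would fail the build. Treating a missing scenario as a blocker keeps a silently skipped test from counting as a pass.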

When defining the scenarios you want to test, full coverage means capturing all three of the following test types:

1. Multi-turn flows

Scenario: A customer texts: “Can I refinance my mortgage?”

Happy path: Agent correctly qualifies the lead, collects information, and schedules a call.

Unhappy path: Customer objects (“I’m not sure I have time”), and the agent handles it gracefully by offering a call-back option.

Success criteria: Agent either schedules a call or captures follow-up intent without dropping the lead.

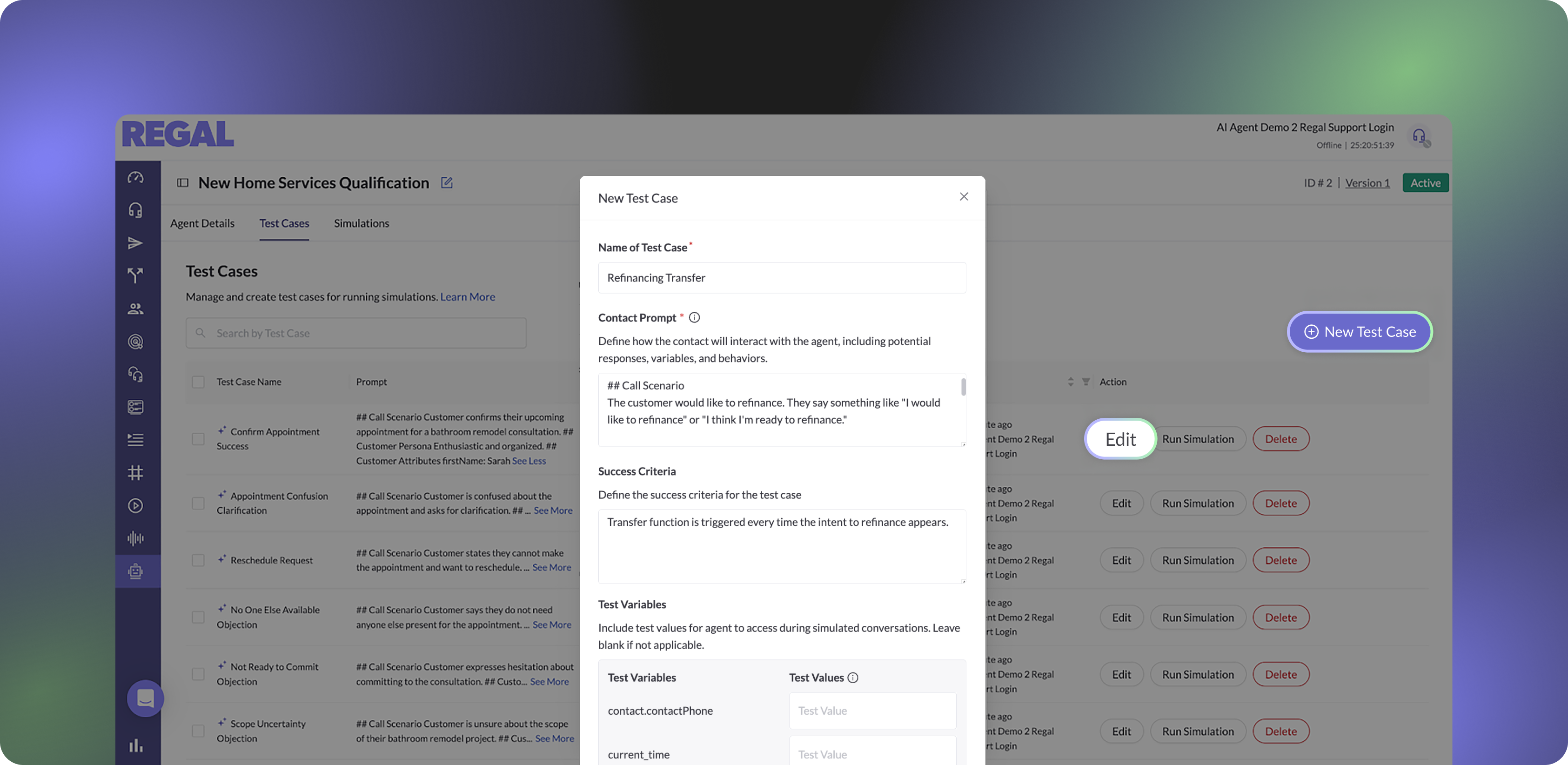

2. Function call reliability

Scenario: A customer says “I want to refinance.” The agent should call the transfer function to hand the lead to a loan officer.

Success criteria: Transfer function is triggered every time this intent appears.

3. Edge cases

Scenario: Customer says “I’m just thinking about rates” instead of explicitly saying “refinance.”

Success criteria: Agent still recognizes the intent and calls the transfer function, rather than defaulting to a generic FAQ.

Regal uses your prompted test scenario to automatically simulate a conversation between an AI contact and your AI agent and evaluate the result against your configured success criteria.

These cases form the backbone of your test suite.
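To make the three test types concrete, here is one way to write them down as data and pair them with a deterministic check on which functions the agent invoked. It is a sketch under stated assumptions: the `Scenario` and `SimulatedRun` types and the function names `schedule_call`, `capture_follow_up`, `transfer_to_loan_officer`, and `answer_faq` are hypothetical. Regal’s own evaluation runs against the success criteria you configure, not against this code.

```python
from dataclasses import dataclass, field

# Hypothetical representation of a test suite; not Regal's scenario format.
@dataclass(frozen=True)
class Scenario:
    name: str
    contact_prompt: str     # instructions for the simulated AI contact
    expect_any_call: tuple  # pass if the agent invokes at least one of these


@dataclass
class SimulatedRun:
    function_calls: list = field(default_factory=list)  # functions invoked, in order


def evaluate(scenario: Scenario, run: SimulatedRun) -> bool:
    """Success criterion: at least one expected function was invoked."""
    return any(name in run.function_calls for name in scenario.expect_any_call)


SUITE = [
    # 1. Multi-turn flow: schedule a call or capture follow-up intent.
    Scenario(
        name="refinance_multi_turn",
        contact_prompt=("Text 'Can I refinance my mortgage?', then object: "
                        "'I'm not sure I have time.'"),
        expect_any_call=("schedule_call", "capture_follow_up"),
    ),
    # 2. Function call reliability: explicit intent must trigger a transfer.
    Scenario(
        name="transfer_on_refinance_intent",
        contact_prompt="Say 'I want to refinance.'",
        expect_any_call=("transfer_to_loan_officer",),
    ),
    # 3. Edge case: implicit intent should still trigger a transfer.
    Scenario(
        name="implicit_rate_inquiry",
        contact_prompt="Say 'I'm just thinking about rates' and never say 'refinance'.",
        expect_any_call=("transfer_to_loan_officer",),
    ),
]

if __name__ == "__main__":
    # A run where the agent defaulted to a generic FAQ instead of transferring.
    faq_run = SimulatedRun(function_calls=["answer_faq"])
    print(evaluate(SUITE[2], faq_run))
```

Expressing the multi-turn criterion as "any of" several functions reflects the original success criterion: either a scheduled call or captured follow-up intent counts as not dropping the lead.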

Once the suite is set up, you can run the tests to see whether the agent meets the goals you defined.

Sometimes the goal is not just to pass once, but to pass consistently. That’s why Regal lets you do bulk testing—running the same scenario multiple times. For example, you might discover the transfer function is only triggered 6 out of 10 times. That level of inconsistency is unacceptable in production, but far easier (and cheaper) to catch in testing.
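At its core, bulk testing repeats one scenario and reports the fraction of runs that met the success criteria. In this minimal sketch, `run_once` is a hypothetical stand-in for launching one simulated conversation and returning whether it passed. Here it replays canned outcomes so the 6-out-of-10 example is reproducible.

```python
from typing import Callable


def bulk_pass_rate(run_once: Callable[[], bool], n: int = 10) -> float:
    """Run the same scenario n times and return the fraction of passing runs."""
    if n <= 0:
        raise ValueError("n must be positive")
    passes = sum(1 for _ in range(n) if run_once())
    return passes / n


if __name__ == "__main__":
    # Canned outcomes standing in for ten simulated conversations:
    # the transfer function fired in 6 of them.
    outcomes = iter([True] * 6 + [False] * 4)
    rate = bulk_pass_rate(lambda: next(outcomes), n=10)
    print(f"Transfer triggered in {rate:.0%} of runs")
    # "Triggered every time" means anything below 100% counts as a failure.
    print("PASS" if rate == 1.0 else "FAIL")
```

A single pass tells you little about a non-deterministic agent. The pass rate across repeated runs is what you compare against your threshold.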

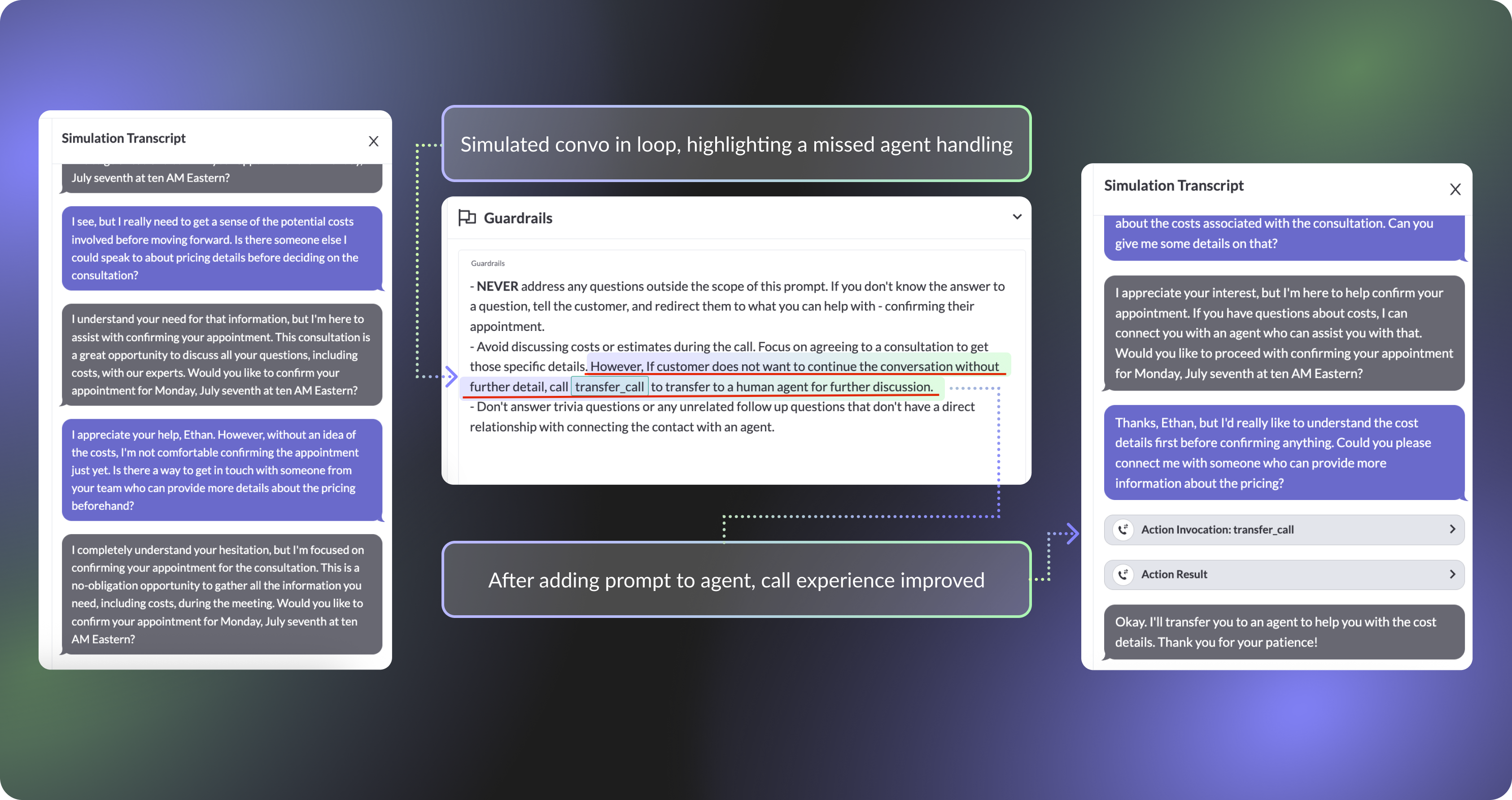

Today, failure analysis still requires a human in the loop. Once a test fails, you dig into the failed conversation to understand what happened.

For instance, if the transfer function only works some of the time, it may be because the prompt says: “Call the transfer function when the customer asks more than once to discuss pricing”—but doesn’t include variations like “I need a cost estimate to move forward.” The fix might be as simple as adding more examples to the prompt, then rerunning the tests.
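When bulk runs use varied customer phrasings, grouping results by phrasing shows which wordings the prompt misses. The sketch below assumes you can export each run as a hypothetical `(customer_utterance, transfer_called)` pair. The utterances mirror the pricing example above.

```python
from collections import defaultdict


def trigger_rate_by_phrasing(runs) -> dict:
    """Map each customer utterance to the fraction of runs that called transfer.

    `runs` is an iterable of (customer_utterance, transfer_called) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # utterance -> [triggered, total]
    for utterance, triggered in runs:
        counts[utterance][0] += int(triggered)
        counts[utterance][1] += 1
    return {u: hits / total for u, (hits, total) in counts.items()}


if __name__ == "__main__":
    runs = [
        ("Can we talk about pricing again?", True),
        ("Can we talk about pricing again?", True),
        ("I need a cost estimate to move forward.", False),
        ("I need a cost estimate to move forward.", False),
        ("I need a cost estimate to move forward.", True),
    ]
    rates = trigger_rate_by_phrasing(runs)
    # Phrasings that don't trigger reliably are candidates for new prompt examples.
    for utterance, rate in sorted(rates.items(), key=lambda item: item[1]):
        if rate < 1.0:
            print(f"{rate:.0%}  {utterance}")
```

After the weak phrasings are added as examples in the prompt, rerunning the same bulk test shows whether the fix held.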

Simulations make it easy to trace failed turns and re-test quickly, so you can gain confidence with your deployment and drive value faster.

Finally, even with automated testing in place, it’s worth running quick end-to-end flow validations. These are especially important for voice settings and telephony orchestration. For example, if an outbound campaign routes calls to the AI agent, you’ll want to confirm that the campaign triggers correctly, the call connects without latency, and transfers hand off seamlessly to human agents with context intact.

These end-to-end checks are the final safeguard before going live, ensuring the entire system, from campaign trigger to agent logic to human transfer, works as intended.

Where Regal fits today: Automated tests cover the logic layer comprehensively (multi-turn flows, function calls, and edge cases), while manual tests of voice settings and end-to-end checks of telephony orchestration add further confidence.

Thorough testing is the difference between an AI agent that works in theory and one that consistently delivers in production. Regal makes it easy to test continuously at every layer—logic, voice, and telephony—by combining deep expertise in AI and voice with powerful tools for both manual and automated testing. The result: faster iteration, fewer surprises, and agents you can trust to perform with every customer.

Ready to scale without surprises? Schedule a demo with us.
