September 2023 Releases

In 2025, we built the platform and tooling that transformed Voice AI agents from novelty trials into enterprise-ready systems capable of operating within real customer interactions, enterprise constraints, and business-critical workflows. This shift was not simply about adding “AI features”, but about rethinking how customer conversations are designed, operated, and improved when AI agents are first-class participants. That meant moving beyond scripts and automation toward agents that can reason, act, and adapt within complex workflows and guardrails, while raising the bar for personalized customer experiences.

The platform now includes tooling for the full lifecycle of AI agents, from development to continuous improvement. Across industries like healthcare, insurance, and home services, customers are using these capabilities to scale lead qualification, ensure every inbound call is handled, and automate high-impact workflows that previously required human capacity. In this blog post, we cover the most important product releases of 2025, focusing on the features that became foundational to how AI agents are built, tested, deployed, monitored, and improved.


This year, we launched an AI Agent Builder for building and customizing voice, SMS, and chat agents unique to your brand and use cases. The builder gives teams direct control over how AI agents are created, structured, and connected into their customer workflows, without relying on custom code or fragmented tooling.

Key capabilities include:

By centralizing prompts, customer-level personalization, knowledge retrieval, and real-time actions, the builder enables AI agents to deliver structured, personalized experiences that maintain continuity across multi-step conversations. Agent behavior can be defined with clear structure and live context, then extended downstream into your integrated CRM or other external systems, supporting critical workflows like lead qualification, scheduling, and inbound handling as volume and complexity scale.

For advanced use cases, the Multi-State Agent Builder provides explicit structure and consistency across multi-step conversational flows, making complex conditional paths easier to test, maintain, and update over time. A drag-and-drop interface lets you define states, transitions, and actions visually, while preserving shared global context so agents can reason coherently across the entire conversation without duplicating logic or prompts.
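To make the idea of states, transitions, and shared global context concrete, here is a minimal Python sketch. It is an illustration only, not Regal's implementation: the `State` and `MultiStateAgent` classes, the outcome labels, and the state names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class State:
    """One step in the conversation, with its own prompt and outgoing transitions."""
    name: str
    prompt: str
    # Maps a hypothetical outcome label to the name of the next state.
    transitions: dict[str, str] = field(default_factory=dict)


@dataclass
class MultiStateAgent:
    states: dict[str, State]
    current: str
    # Shared context visible to every state, so no state re-collects data.
    context: dict[str, str] = field(default_factory=dict)

    def advance(self, outcome: str, **updates: str) -> str:
        """Record new context, then follow the transition for this outcome."""
        self.context.update(updates)
        next_name = self.states[self.current].transitions.get(outcome)
        if next_name is None:
            raise ValueError(f"No transition for {outcome!r} from {self.current!r}")
        self.current = next_name
        return self.current


# Hypothetical lead-qualification flow.
agent = MultiStateAgent(
    states={
        "greet": State("greet", "Greet the caller and confirm their name.",
                       {"confirmed": "qualify"}),
        "qualify": State("qualify", "Ask about coverage needs.",
                         {"qualified": "schedule", "not_a_fit": "wrap_up"}),
        "schedule": State("schedule", "Offer appointment times.",
                          {"booked": "wrap_up"}),
        "wrap_up": State("wrap_up", "Summarize and end the call."),
    },
    current="greet",
)
agent.advance("confirmed", first_name="Dana")
agent.advance("qualified", product="auto")
```

Because the context lives on the agent rather than on any single state, the scheduling step can use the name collected during the greeting without repeating that logic or prompt.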


As our customers pushed the bounds of what AI agents could do, from price negotiation to roadside assistance, the builder evolved to support greater flexibility and control. We introduced multi-LLM support across OpenAI, Claude, and Gemini so agents can be optimized for reasoning depth, latency, or cost, and partnered with leading voice providers ElevenLabs, Cartesia, and Rime to deliver ultra-realistic, low-latency voices adaptable to your brand identity and customer profile.

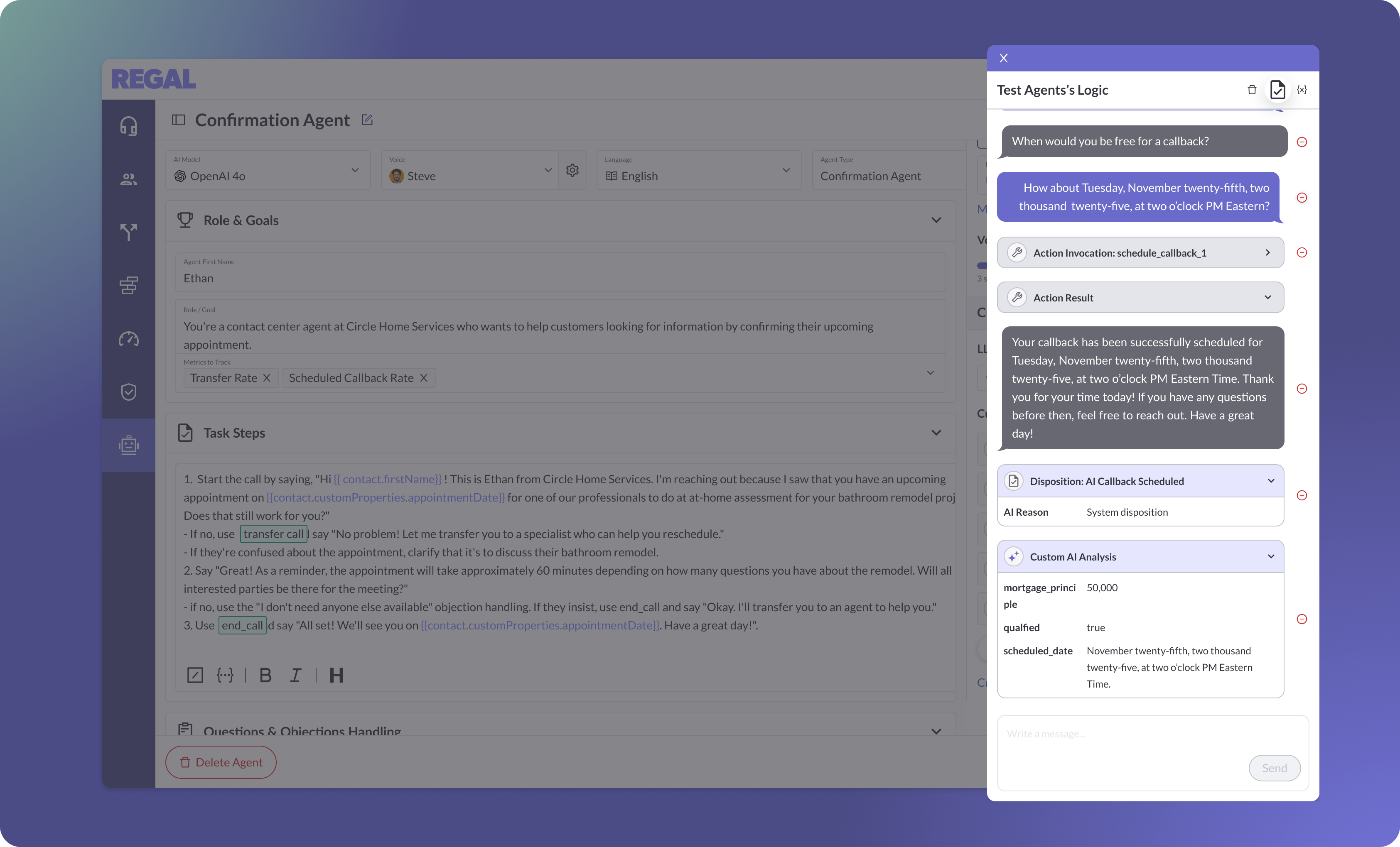

As you build and update your agent’s prompt and settings, the fastest way to assess and improve quality is to experience the agent as a customer would. Test Logic was designed to support this build-time workflow, giving you a direct way to test agents side by side as you adjust prompts, logic, and settings in the Builder.

Through live test chat and voice testing, you can validate the qualitative aspects of the experience that require human judgment, such as tone, pacing, pronunciation, and voice quality, as well as agent speech, action invocations, and dispositioning. This allows you to iterate quickly while maintaining control over how agents sound and behave, ensuring changes translate to the intended customer experience. Test Logic complements automated testing by focusing on experiential quality early in the build process, helping you catch issues before they propagate into broader testing and production workflows.

As AI agents evolve through frequent prompt updates, logic changes, and knowledge base revisions, validating behavior before deployment becomes a continuous requirement rather than a one-time checkpoint. In 2025, we introduced Simulations to give teams a scalable way to test AI agents end to end before changes reach production, without relying on manual review or bespoke test scripts.


With this testing suite, Regal automatically simulates realistic end-to-end conversations between AI-generated contacts and your AI agent based on test cases you define. Tests are reusable and can be rerun instantly as prompts, settings, or Knowledge Bases change, enabling continuous validation as agents evolve, while a built-in test generator can automatically create an initial set of scenarios to reduce setup time and ensure meaningful coverage from day one. Each simulated conversation is evaluated against defined success criteria, producing clear pass or fail results with AI-generated explanations that pinpoint the root cause of failures, from logic gaps to incomplete context or failed data extraction.
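The shape of a reusable test case with success criteria can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the product evaluates conversations with AI, whereas this sketch substitutes plain phrase checks over a canned transcript, and `SimulationCase`, `agent_said`, and `evaluate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable

Transcript = list[tuple[str, str]]  # (speaker, utterance) pairs


@dataclass
class SimulationCase:
    name: str
    # Each criterion pairs a description with a check over the transcript.
    criteria: list[tuple[str, Callable[[Transcript], bool]]]


def agent_said(phrase: str) -> Callable[[Transcript], bool]:
    """Criterion: some agent turn contains the phrase (case-insensitive)."""
    return lambda t: any(s == "agent" and phrase.lower() in u.lower() for s, u in t)


def evaluate(case: SimulationCase, transcript: Transcript) -> tuple[bool, list[str]]:
    """Return overall pass/fail plus the descriptions of any failed criteria."""
    failures = [desc for desc, check in case.criteria if not check(transcript)]
    return (not failures, failures)


case = SimulationCase(
    name="books appointment",
    criteria=[
        ("agent confirms the appointment", agent_said("you're booked")),
        ("agent discloses call recording", agent_said("this call is recorded")),
    ],
)
transcript = [
    ("contact", "Can I come in Tuesday?"),
    ("agent", "Yes, you're booked for Tuesday at 10am."),
]
passed, failures = evaluate(case, transcript)
```

Here the case fails, and `failures` names the unmet criterion (the missing recording disclosure). Because the case is plain data, it can be rerun unchanged after every prompt or Knowledge Base update.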

Deploying agentic workflows extends beyond the AI agent itself to how conversations are routed, data is propagated, and calls are orchestrated at scale. This year, we expanded orchestration across the platform so AI agents can drive progressive dialer campaigns, connect directly to calendaring systems, trigger real-time follow-ups based on structured data, and manage outbound pacing.

The AI Decision Node introduced a flexible way to route and control workflows using AI-derived signals rather than rigid rules. You can evaluate message content, sentiment, language, intent, or any field from the triggering event or contact profile, then dynamically route customers based on that analysis. Whether flagging frustrated callers for human follow-up, routing Spanish speakers to bilingual agents, or prioritizing high-intent leads, the AI Decision Node allows workflows to adapt in real time as conversations unfold. Prompts can be refined with live data, making decisioning more precise as agents learn from production interactions.
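As a rough illustration of routing on AI-derived signals, the Python sketch below takes the output of an analysis step and picks a branch. It is not the AI Decision Node's actual interface: the `Signals` fields, their values, and the branch names are hypothetical, and the LLM call that would produce the signals is left out.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical output of an LLM analysis step over the triggering event."""
    sentiment: str   # e.g. "positive", "neutral", "frustrated"
    language: str    # e.g. "en", "es"
    intent: str      # e.g. "purchase", "support", "unknown"


def route(signals: Signals) -> str:
    """Pick a workflow branch; earlier checks take priority over later ones."""
    if signals.sentiment == "frustrated":
        return "human_follow_up"
    if signals.language == "es":
        return "bilingual_agents"
    if signals.intent == "purchase":
        return "priority_leads"
    return "default_queue"


branch = route(Signals(sentiment="neutral", language="es", intent="purchase"))
```

The ordering is a design choice in this sketch: a frustrated caller goes to human follow-up even if they are also a high-intent lead, so the most urgent condition is checked first.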

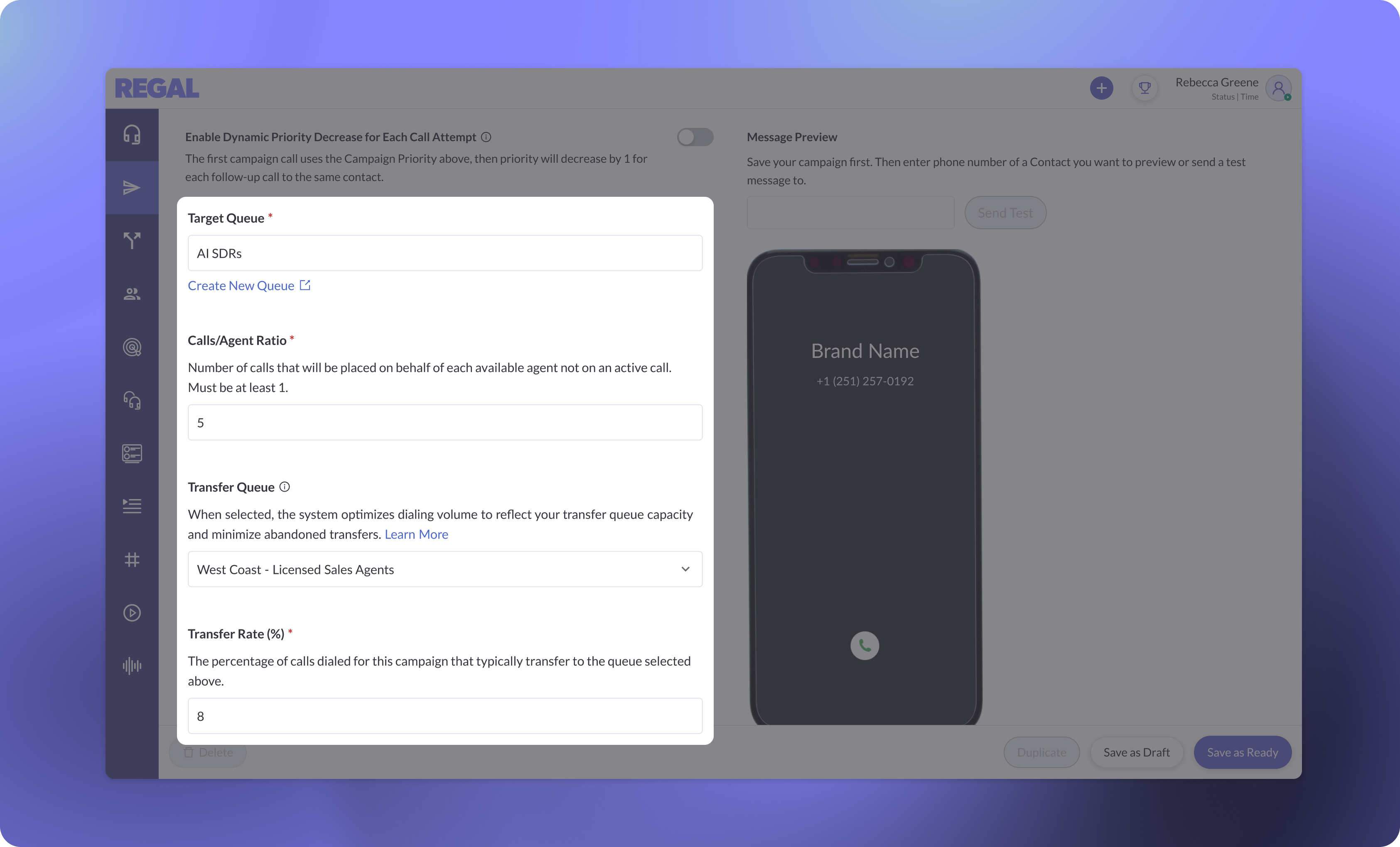


In many production workflows, AI agents handle the initial interaction but must transfer to licensed or specialized human teams to complete an action, such as closing a sale, updating a policy, or resolving a regulated request. Transfer-Aware Dialing was designed for these scenarios, ensuring AI-driven outreach can scale without overwhelming downstream human capacity. Regal’s dialer continuously accounts for human transfer availability, pacing outbound volume to match real-time staffing. This reduces the risk of abandoned transfers while preserving the efficiency gains of automation, allowing AI and human agents to operate as a coordinated system as call volume increases.
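One simple way to reason about pacing against transfer capacity is sketched below in Python. This is an assumed heuristic for illustration, not Regal's dialing algorithm: it supposes a known average transfer rate and caps concurrent AI calls at the number the available human agents could absorb. The function and parameter names are hypothetical.

```python
import math


def calls_to_place(available_humans: int, in_flight_calls: int, transfer_rate: float) -> int:
    """Number of new outbound calls to start without outrunning human capacity.

    transfer_rate is the assumed fraction of AI calls that end in a transfer.
    """
    if not 0 < transfer_rate <= 1:
        raise ValueError("transfer_rate must be in (0, 1]")
    # Concurrent calls the humans can absorb if each call yields
    # transfer_rate transfers on average.
    supportable = math.floor(available_humans / transfer_rate)
    return max(0, supportable - in_flight_calls)


# 3 free human agents, 8 AI calls already live, 1 in 4 calls transfers.
new_calls = calls_to_place(available_humans=3, in_flight_calls=8, transfer_rate=0.25)
```

With these inputs the sketch allows 4 more calls (12 supportable minus 8 in flight). When staffing drops, the same calculation returns 0 and outbound volume pauses rather than queuing transfers nobody can take.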

As AI agents handle more customer interactions, understanding conversational outcomes, contact sentiment, and behavioral drift becomes essential. This year, we expanded observability across the AI agent lifecycle to surface those signals through real-time metrics and post-call LLM-driven data extraction.

Custom AI Analysis provides a configurable layer for extracting structured data from AI agent conversations. You can define the specific signals you want to capture, such as call reason, objection type, qualification outcomes, or resolution status, and select the LLM used to perform that analysis to optimize for accuracy or cost. These signals are extracted automatically from every transcript and made available for reporting, downstream integrations, and post-call workflows, helping you tie conversational behavior directly to business outcomes and personalize follow-up customer interactions. By consistently extracting conversational data at scale, Custom AI Analysis creates a reliable foundation for measuring performance and driving targeted improvements.
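To show what "defining signals" and getting structured data back can look like, here is a small Python sketch that validates a model's JSON reply against a set of defined fields. The `SIGNALS` definitions, allowed values, and `parse_analysis` helper are hypothetical, and the LLM call itself is omitted; this is not the product's configuration format.

```python
import json

# Hypothetical signal definitions: field name -> allowed values.
SIGNALS = {
    "call_reason": {"billing", "new_policy", "claim", "other"},
    "resolution_status": {"resolved", "escalated", "unresolved"},
}


def parse_analysis(raw: str) -> dict[str, str]:
    """Validate a model's JSON reply against SIGNALS; reject unknown values."""
    data = json.loads(raw)
    result = {}
    for name, allowed in SIGNALS.items():
        value = data.get(name)
        if value not in allowed:
            raise ValueError(f"{name}={value!r} is not one of {sorted(allowed)}")
        result[name] = value
    return result


# A reply as an LLM might return it; the extra key is ignored.
reply = '{"call_reason": "claim", "resolution_status": "escalated", "notes": "n/a"}'
extracted = parse_analysis(reply)
```

Constraining each signal to a fixed set of values is what keeps the extracted data consistent enough to aggregate in reports and to trigger post-call workflows.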


We added real-time agent stats to give immediate visibility into agent productivity, effectiveness, and customer reception. Metrics like Receptiveness to AI provide a live signal of engagement and call containment, making it easier to monitor performance right after go-live, spot issues early, validate changes, and refine agent behavior as AI scales into critical customer-facing workflows.


Together, these capabilities make agent performance observable and actionable, enabling faster diagnosis of underperformance and clearer validation as improvements are rolled out.

Across the agent lifecycle, we’ve found that improvement after initial deployment is often the longest and most challenging phase. It requires identifying the right issue, determining how to address it, and making the change, and the first step alone can feel like searching for a needle in a haystack. Regal Improve was introduced to shorten that feedback loop, giving you a focused, data-driven way to diagnose issues and make targeted updates without digging through biased samples of transcripts or relying on intuition.

The Conversation Insights dashboard surfaces the patterns that are most prevalent across AI agent interactions. Instead of reviewing calls one by one, you can view aggregated themes such as common objections, unanswered questions, or points of confusion that correlate with poor outcomes. From there, you can drill into representative conversations to understand context and agent behavior at the moment issues occur. This makes it easier to prioritize changes based on impact, whether that means refining prompts, adjusting logic, or improving escalation paths.


The Knowledge Base Coverage dashboard breaks down how well agents are retrieving and using available information during conversations. Coverage insights highlight where agents fail to find relevant answers, rely on fallback responses, or retrieve incomplete content, helping you pinpoint gaps in your Knowledge Base. By connecting retrieval performance directly to real conversations, Knowledge Base Coverage makes it clear which updates will improve accuracy and containment rates, allowing you to strengthen agent performance with minimal effort.


2025 was the year AI agents moved from promising experiments to dependable production systems. Across building, testing, orchestration, observability, and improvement, we invested in providing the structure, controls, and feedback loops required to run and manage AI agents at scale. Building on that foundation, 2026 will focus on reducing the manual effort required to get agents live and successful, using AI to accelerate iteration, create delightful customer experiences, and drive business outcomes.

Schedule a demo to learn how AI agents can drive better outcomes across your customer conversations.
